ELDT-RP2: Sound Event Detection on Mobile Devices

Principal Investigator: Professor Roger Zimmermann, SoC

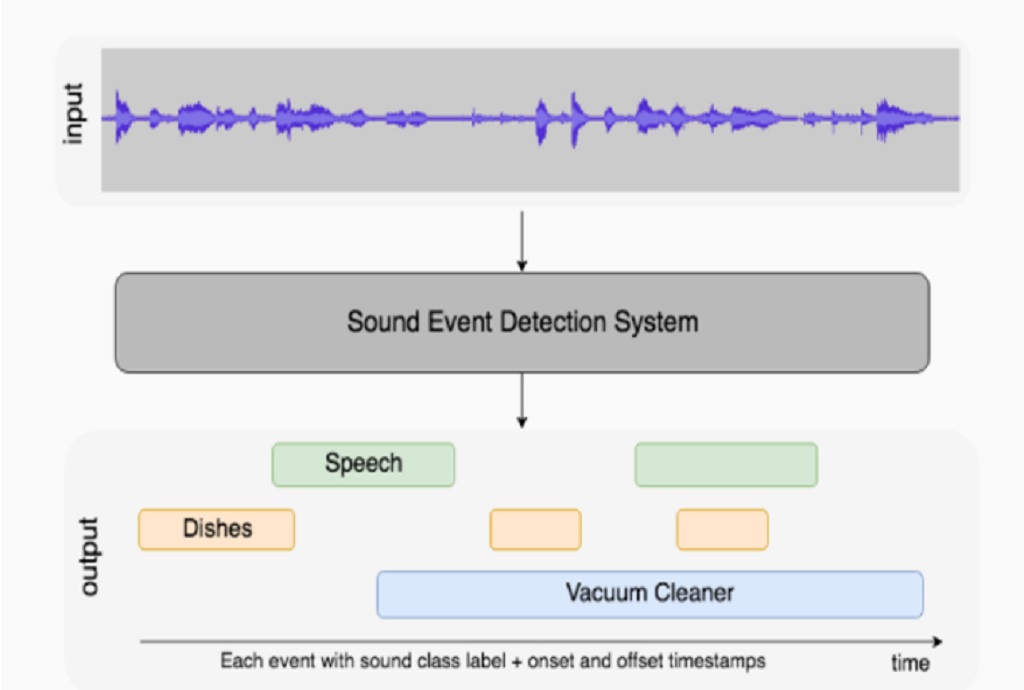

Recognizing ongoing events from acoustic cues is a critical yet challenging problem. Sound event detection aims to automatically identify the presence of multiple events in an audio scene (or recording), which is of great significance in many applications, including surveillance, security and context-aware services. In this project, our goal is to design and develop high-performance algorithms for real-time sound event detection that can be executed directly on mobile, wearable and IoT devices.

One research direction that we have investigated is a novel visual-assisted teacher-student mutual learning framework, in which both a single-modal and a cross-modal transfer loss are used to collaboratively learn the audio-visual correlations between the teacher and the student networks. The teacher network is trained on both visual and audio features, and its knowledge is then transferred to the student network, which requires only audio input during inference.
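To make the idea of combining a single-modal and a cross-modal transfer loss more concrete, the following is a minimal, hypothetical sketch of a distillation-style objective for the audio-only student. It is not the project's actual formulation. It assumes multi-label sound events with one sigmoid output per class, a teacher that exposes both an audio-only prediction and a fused audio-visual prediction, and binary cross-entropy as the transfer measure. The function names (`student_loss`, `detect_events`) and the weights `alpha` and `beta` are illustrative assumptions. In a true mutual-learning setup, the teacher would also receive a symmetric term from the student; only the student side is shown here.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Map a logit to a per-class probability (multi-label, so no softmax)."""
    return 1.0 / (1.0 + math.exp(-x))


def bce(target: List[float], pred: List[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between a (possibly soft) target and predicted probabilities."""
    total = 0.0
    for t, p in zip(target, pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(target)


def student_loss(
    labels: List[float],
    student_logits: List[float],
    teacher_audio_logits: List[float],
    teacher_av_logits: List[float],
    alpha: float = 0.5,  # hypothetical weight of the single-modal transfer term
    beta: float = 0.5,   # hypothetical weight of the cross-modal transfer term
) -> float:
    """Hypothetical student objective: supervised loss plus two transfer terms."""
    s = [sigmoid(z) for z in student_logits]
    t_audio = [sigmoid(z) for z in teacher_audio_logits]
    t_av = [sigmoid(z) for z in teacher_av_logits]
    supervised = bce(labels, s)     # ground-truth event labels
    single_modal = bce(t_audio, s)  # audio (teacher) -> audio (student)
    cross_modal = bce(t_av, s)      # audio-visual (teacher) -> audio (student)
    return supervised + alpha * single_modal + beta * cross_modal


def detect_events(logits: List[float], class_names: List[str], threshold: float = 0.5) -> List[str]:
    """At inference time only the audio-only student runs; threshold each class independently."""
    return [name for name, z in zip(class_names, logits) if sigmoid(z) >= threshold]


if __name__ == "__main__":
    labels = [1.0, 0.0, 1.0]
    teacher_audio = [2.0, -2.0, 1.0]
    teacher_av = [3.0, -3.0, 2.0]
    print(student_loss(labels, [2.5, -2.5, 1.5], teacher_audio, teacher_av))
    print(detect_events([2.0, -1.0, 1.5], ["dog_bark", "siren", "speech"]))
```

Because sound event detection is multi-label (several events can be active at once), the sketch uses independent per-class sigmoids and binary cross-entropy rather than a softmax over classes; the teacher's probabilities simply act as soft targets for the student.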