Mechanical Engineering

The AI swimming academy for miniature robots

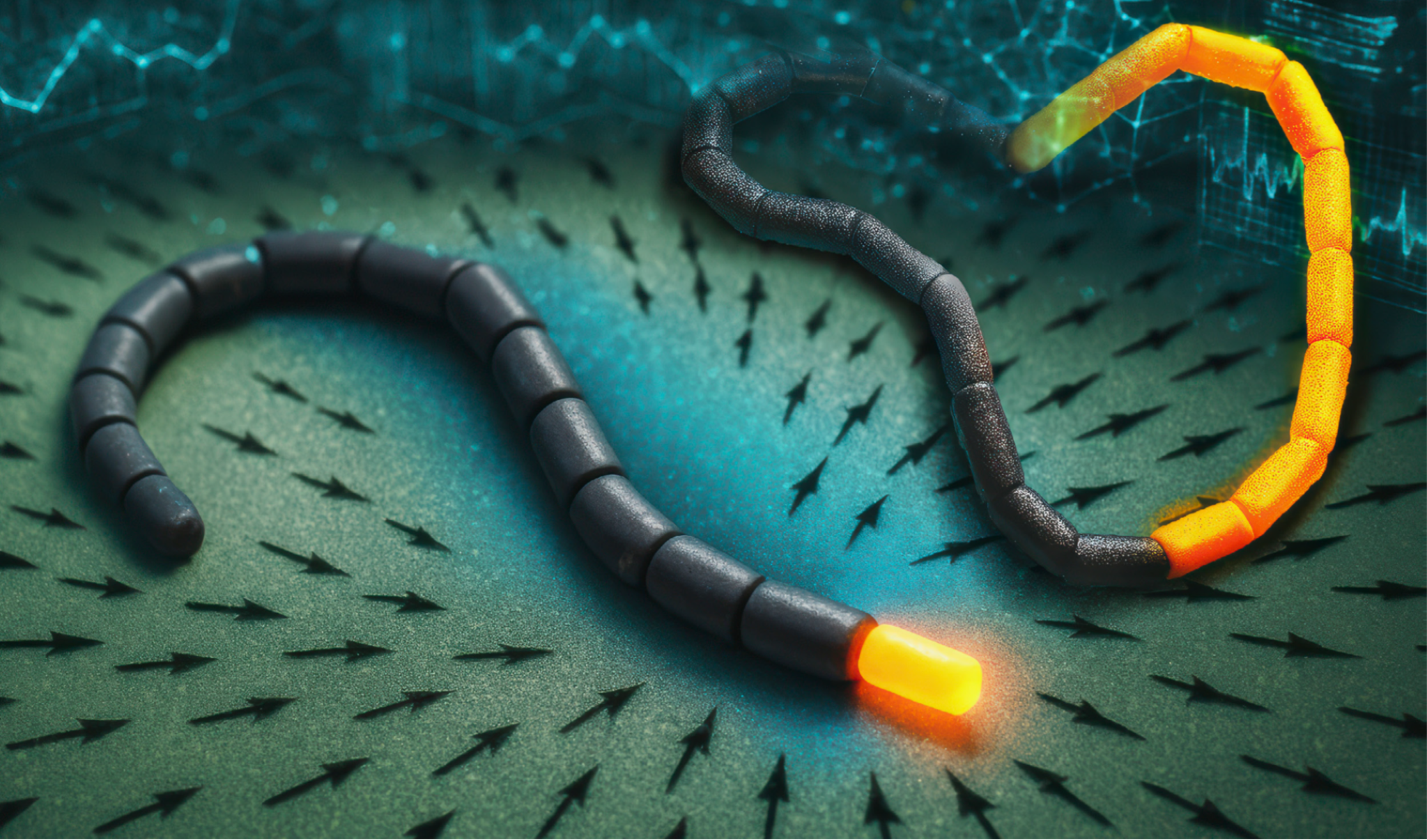

Powered by artificial intelligence, tiny robotic swimmers traverse tricky terrain by mimicking how human cells find their way.

Inside your body, there’s constant motion. Not the kind you notice, but the kind that keeps you going. Immune cells fan out like search parties, sniffing out intruders. Gut microbes wriggle towards nutrients. Sperm cells race, jostle and twist their way through the narrow corridors of the female reproductive tract. Even nerve cells, in their earliest stages, inch toward their intended destinations.

What ties these journeys together is a knack for reading the environment. These microscopic travellers respond to subtle cues, like chemical signals, to decide where to go next. Their movements are, at heart, finely tuned feedback loops: sense a whiff of what you’re after, steer that way, repeat. Biologists call this phenomenon “taxis,” and when chemicals guide the way, it’s known as “chemotaxis.”
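
For readers who think in code, the sense-steer-repeat loop can be sketched in a few lines of Python. This is a toy illustration, not a model of any real cell: the bell-shaped chemical field, the two side sensors, and every parameter value (`speed`, `turn`, `sensor_offset`) are assumptions made up for this example.

```python
import math

def concentration(x, y, source=(0.0, 0.0)):
    """Hypothetical chemical field: strongest at the source, fading with distance."""
    d2 = (x - source[0]) ** 2 + (y - source[1]) ** 2
    return math.exp(-d2 / 50.0)

def chemotaxis_walk(start=(8.0, 6.0), heading=0.0, steps=200,
                    speed=0.1, turn=0.2, sensor_offset=0.3):
    """Sense on both sides, steer toward the stronger reading, step, repeat."""
    x, y = start
    for _ in range(steps):
        # Sense: sample the field slightly to the left and right of the heading.
        lx = x + sensor_offset * math.cos(heading + math.pi / 2)
        ly = y + sensor_offset * math.sin(heading + math.pi / 2)
        rx = x + sensor_offset * math.cos(heading - math.pi / 2)
        ry = y + sensor_offset * math.sin(heading - math.pi / 2)
        left, right = concentration(lx, ly), concentration(rx, ry)
        # Steer: turn toward whichever side reads stronger.
        heading += turn if left > right else -turn
        # Move: take one small step along the new heading.
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
    return x, y

x, y = chemotaxis_walk()
print(f"distance to source: start 10.00, end {math.hypot(x, y):.2f}")
```

The walker never knows where the source is. It only compares two nearby readings, yet the repeated comparison is enough to pull it in and keep it circling close to the source.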

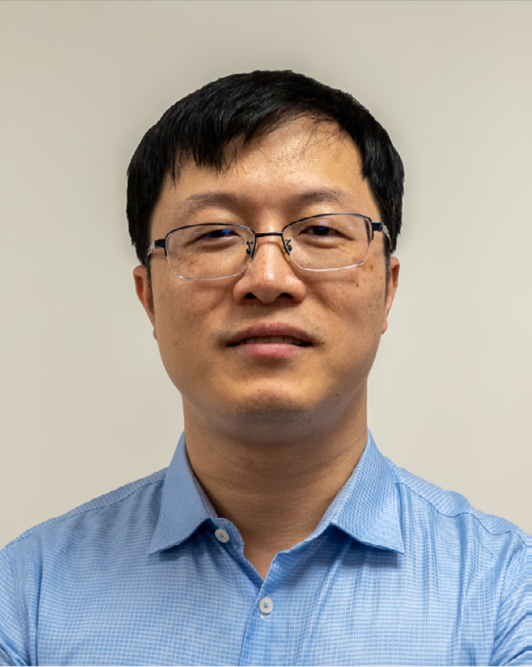

Together with his team, Assistant Professor Zhu Lailai taught simulated robotic swimmers how to traverse tricky terrain by harnessing reinforcement learning.

It’s a beautiful evolutionary trick. But could lifeless machines pull it off?

“Can the evolutionary wisdom behind these instinctive search behaviours be meaningfully translated into engineering platforms?” ponders Assistant Professor Zhu Lailai. “How might AI endow synthetic agents with such biologically informed intelligence?”

It’s a question his team at the Department of Mechanical Engineering, College of Design and Engineering (CDE), National University of Singapore set out to explore. In doing so, they may have given miniature robots a leg (or fin) up.

In a study published in Nature Communications, Asst Prof Zhu’s team taught simulated robotic swimmers how to move and steer themselves through liquid environments using a machine-learning technique called reinforcement learning (RL). While the approach was inspired by nature, the goal is squarely technological: to design tiny robots that can one day navigate the human body or other hard-to-reach spaces — on their own.

To start, the researchers designed two kinds of robot bodies. One was a linked chain of segments, resembling the whip-like tail of a sperm cell. The other formed a ring, mimicking the blobby, flexible outline of an amoeba or immune cell.

The idea for the ring-shaped “swimmer” came in a moment of epiphany. “I was in a taxi holding my six-month-old daughter after a hospital check-up, and my arms naturally formed a ring while cradling her,” recalls Asst Prof Zhu. “That’s when it struck me: what if we connected the head and tail of the chain swimmer? Would it move like an amoeba?”

Each swimmer was composed of multiple hinges, allowing it to bend, twist and squirm in fluid. But it didn’t know how to move — yet. That’s where RL came in. Using a two-level RL model, the researchers first trained the swimmers to master basic motion: how to move forward, backward or rotate using sequences of joint movements. Next, building on those motor skills, they trained the robots to “sniff out” a target chemical source and move towards it, just as real cells do when homing in on nutrients, distress signals or other chemical cues.
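
The two-level idea can be made concrete with a deliberately small sketch. It is not the team’s model: the low level here is a hard-coded table of hypothetical joint sequences standing in for learned motor skills, the world is a one-dimensional line, and the high level uses a simple one-step value update (a bandit-style simplification) rather than the RL algorithm used in the study. All names and numbers are assumptions for illustration.

```python
import random

# Low level: each motor skill is a placeholder joint-command sequence
# with a net displacement. In the study, these skills are learned.
SKILLS = {
    "forward": {"joints": [+1, -1, +1], "displacement": +1},
    "backward": {"joints": [-1, +1, -1], "displacement": -1},
}
STATES = ("head_stronger", "tail_stronger", "balanced")

def concentration(x, source=0):
    """Hypothetical 1D chemical field that peaks at the source."""
    return -abs(x - source)

def sense(x):
    """Local cue only: compare the reading at the head with the one at the tail."""
    head, tail = concentration(x + 1), concentration(x - 1)
    if head > tail:
        return "head_stronger"
    if tail > head:
        return "tail_stronger"
    return "balanced"

def execute_skill(x, skill):
    # A real simulator would play SKILLS[skill]["joints"] through a fluid model.
    return x + SKILLS[skill]["displacement"]

def train_high_level(steps=2000, alpha=0.2, epsilon=0.1, seed=0, start=15):
    """High level: learn which skill to pick for each local cue."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in SKILLS}
    x = start
    for _ in range(steps):  # one continuous run, with no resets
        state = sense(x)
        if rng.random() < epsilon:
            skill = rng.choice(list(SKILLS))  # occasional exploration
        else:
            skill = max(SKILLS, key=lambda a: q[(state, a)])
        new_x = execute_skill(x, skill)
        # Reward: did the chemical reading improve after the move?
        reward = concentration(new_x) - concentration(x)
        q[(state, skill)] += alpha * (reward - q[(state, skill)])
        x = new_x
    policy = {s: max(SKILLS, key=lambda a: q[(s, a)]) for s in STATES}
    return policy, x

policy, final_x = train_high_level()
print(policy["head_stronger"], policy["tail_stronger"])
```

The split mirrors the description above: the high level never touches the joints directly. It only chooses among skills, which keeps its decision problem small.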

Fast learners

Unlike typical machine learning setups, the team didn’t hit a reset button every time the robot messed up. Instead, training was continuous, much like how learning works in nature. “In biology, you don’t get to restart from square one,” adds Associate Professor Ong Chong Jin from CDE’s Department of Mechanical Engineering, who brings his expertise in optimal control to the research team. “A cell either figures it out in the moment, or it doesn’t survive. That’s the realism we wanted to ingrain into the model.”

What is more, the robots didn’t rely on any GPS-like tracking or global awareness. They had to make sense of their environment using only local cues — the pressure and chemical levels around their hinges. That’s roughly equivalent to how real cells feel their way around using surface receptors, without any map or memory of where they started.
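
One way to picture sensing without a map is a ring-shaped body that compares the chemical readings at its own hinges. The sketch below is an assumption-laden illustration, not the method from the paper: the evenly spaced hinges, the distance-based field and the weighted-average estimator are all invented for this example. The estimator uses only the readings and the hinge angles in the body’s own frame, never the body’s position in the world.

```python
import math

def hinge_angles(n_hinges):
    """Angles of evenly spaced hinges around the ring, in the body frame."""
    return [2 * math.pi * i / n_hinges for i in range(n_hinges)]

def read_hinges(center, radius, n_hinges, field):
    """Environment side: the chemical level at each hinge location.
    The swimmer only receives the returned readings, not `center`."""
    cx, cy = center
    return [field(cx + radius * math.cos(a), cy + radius * math.sin(a))
            for a in hinge_angles(n_hinges)]

def estimate_direction(readings):
    """Swimmer side: weight each hinge direction by how far its reading
    sits above or below the average, then take the resulting angle."""
    n = len(readings)
    mean = sum(readings) / n
    angles = hinge_angles(n)
    gx = sum((r - mean) * math.cos(a) for r, a in zip(readings, angles))
    gy = sum((r - mean) * math.sin(a) for r, a in zip(readings, angles))
    return math.atan2(gy, gx)

# Hypothetical field: readings rise as the distance to a source at (10, 0) falls.
field = lambda x, y: -math.hypot(x - 10.0, y)
readings = read_hinges(center=(0.0, 0.0), radius=1.0, n_hinges=8, field=field)
print(f"estimated bearing to source: {estimate_direction(readings):.3f} rad")
```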

Assistant Professor Zhu Lailai (right) with Associate Professor Ong Chong Jin (middle) and PhD student Wang Yufei (left)

In simulations, the robots managed to navigate towards targets in a range of tricky environments — even when presented with a panoply of obstacles: competing signals, swirling fluid currents or narrow passages. The chain-like swimmer, with its undulating motion, resembled how sperm cells power forward. The ring-shaped one, meanwhile, pulsed and contracted like a crawling amoeba, confirming Asst Prof Zhu’s hunch from that ride home with his daughter.

Each swimmer’s body shape influenced how it moved, and consequently how well it performed in different tasks. The ring model, for instance, struggled in very tight spaces due to its inflexible surface, whereas the chain model, with its directional asymmetry, could steer with greater finesse.

Learning to adapt

While the team’s work is still in silico — in simulation rather than in actual devices — the AI underpinning the mini robots represents broader shifts in how autonomous machines can be conceived and designed. Rather than hard-coding behaviours or hand-tuning controls, their work shows how RL can enable robotic systems to develop their own strategies, shaped by their bodies, surroundings and the task at hand.

“Importantly, our reset-free, two-level RL framework addresses constraints that many robots face, such as the inability to reboot or rely on global sensing,” says Asst Prof Zhu. “Robots that can learn from local cues and adapt on the fly are better equipped to operate in the unpredictable conditions of the real world.”

Looking ahead, the team is working to bring their concepts into wet-lab experiments. They’re also exploring a much wider range of body designs beyond the two studied so far. For example, with just six links, there are 27 possible shapes, most of which do not exist in nature.

“Biological organisms evolve under many constraints, but robotic systems aren’t bound by those. RL lets us rapidly test which shapes produce effective movement, even for forms nature never tried. The miniature swimmers could even learn to change their own shape mid-task to respond to new challenges,” adds Asst Prof Zhu.

With AI as their coach, even lifeless robots may one day learn the most human skill of all — how to adapt and carry on.

View Our Publications ▏Back to Forging New Frontiers - Aug 2025 Issue

If you would like to connect with us, email us at cdenews@nus.edu.sg