Creating Iconic Audio Experiences

With the advent of spatial audio and 3D audio effects, smart speakers can now use advanced algorithms and audio processing techniques to place sounds in different parts of a room, creating immersive audio landscapes.

However, these abilities currently still have to be controlled manually with a remote control. Common indoor positioning technologies like Wi-Fi and Bluetooth cannot localise the listener to a precision better than a few metres. We will therefore explore newer positioning technologies like ultra-wideband (UWB), which is capable of 10 cm precision, to localise the listener instead. We will also explore new features and use cases that this ability makes possible, such as music that follows a listener as they move around a room or between rooms, location-based playlists, and self-calibrating and self-adjusting speakers.

In this studio, we will work with Bang & Olufsen, who are renowned for creating iconic audio experiences, to explore these and other technologies (e.g. flexible electronics, electronic fabrics, and innovative headphone designs) and to design and test new listening experiences.

Examples of problem statements in this studio:

- Localising listeners: how can we accurately detect how many people are in the room and where they are positioned?

- Directing music dynamically: how can we detect how many speaker products are present and control them to direct music optimally to different listeners?

- Innovative materials and mechanical designs for headphones: how can we use flexible electronics, electronic fabrics, and other innovative mechanical designs (e.g. to improve airflow) to design headphones that provide a better experience?

- On-device LLM for voice control on remote controls: how might we use open-source libraries and frameworks to implement on-device voice control?

- Best use of parametric acoustic arrays: how might we use ultrasound-based directional audio to create new products and experiences?

- Creating visuals from sound: how might we use audio-transparent materials to provide visual representations of music as an extension to the Beosound Shape?

Project partner:

- Bang & Olufsen

Project supervisors:

- Dr Yen Shih Cheng (sc.yen@nus.edu.sg)

- Mr Graham Zhu Hongjiao (graham88@nus.edu.sg)

Studio timeslot in Semester 2 AY2025/2026:

- Wednesday 9 am to 12 pm

Examples of past projects:

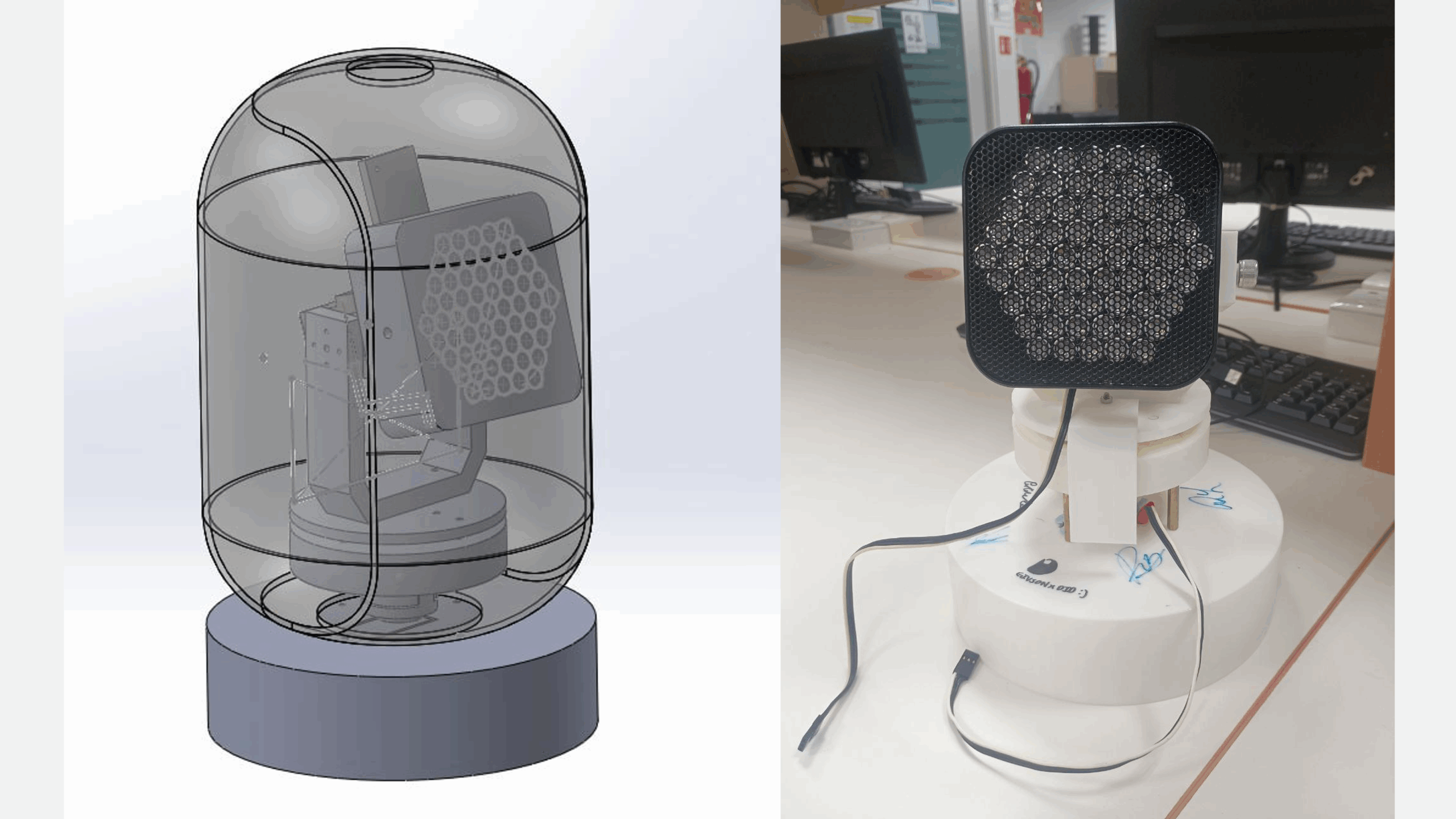

This project aims to provide golfers with a hands-free, sweat-free, personalised audio experience with minimal disturbance to others by combining directional audio and ultra-wideband localisation technologies in one commercial product.

Dazzler is a system that uses real-time signal analysis of live music to create dynamic, synchronised light shows for performances, unlike existing solutions that rely on manual control, pre-programming, or basic sound-active modes.

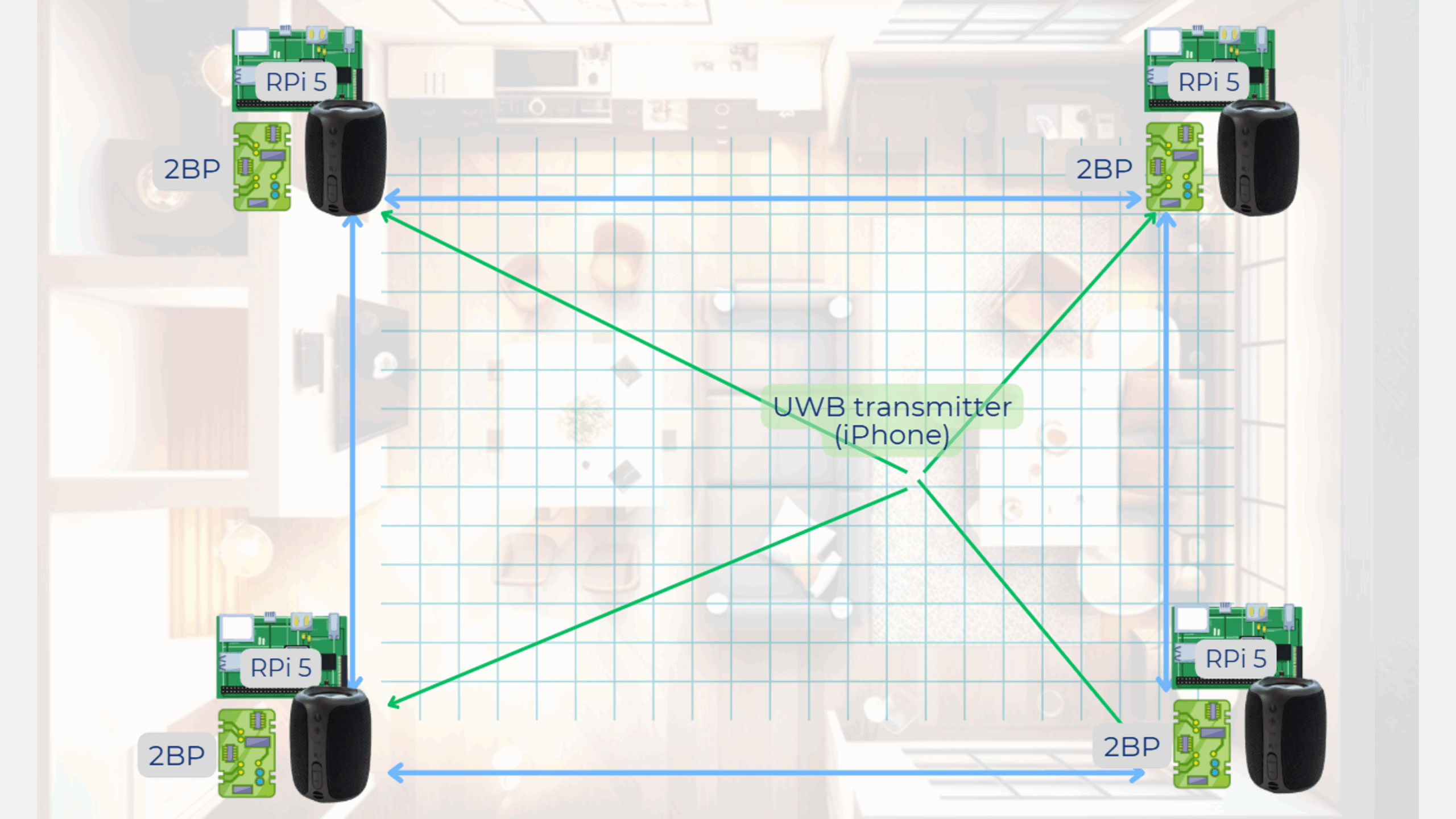

In this project, we designed a distributed system that provides live indoor location data for users, with enhanced reliability and accuracy, enabling smart audio automations and other adaptive experiences.