Low light enhancement via deep learning for autonomous systems

Project Motivation

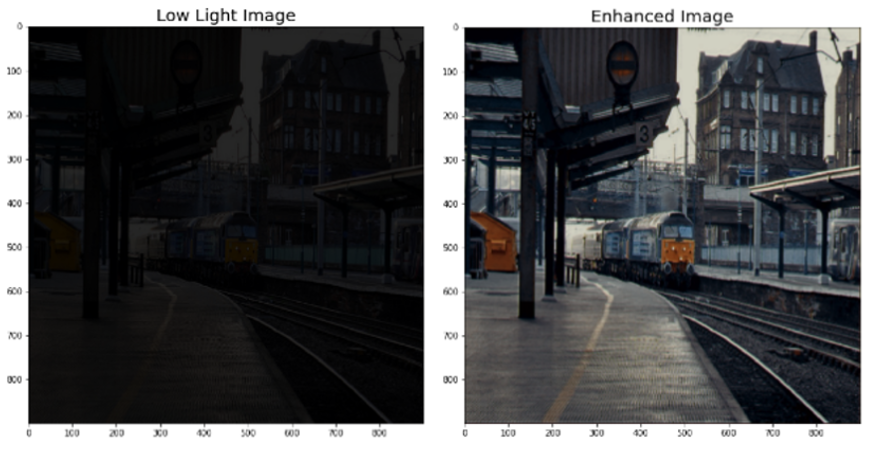

In computer vision (CV), and in video processing in particular, low-light environments pose a multitude of problems, such as degraded contrast in the colour (RGB) input from a camera sensor and the loss of object features. Autonomous systems such as UAVs, AGVs and AUVs are often required to execute missions in low-light environments (e.g. night-time surveillance, cave mapping, deep-sea exploration). In such cases, recovering enough usable visual information is essential for both manned and autonomous operations.

Dark image patches, low contrast and a low signal-to-noise ratio make it drastically harder for CV algorithms to extract meaningful information from images, because the volume of useful, processable data is greatly constrained. Traditional ways of working around low-light conditions each carry a cost. Infrared thermographic sensors lose features and colour information, because their output is a heat gradient map. A mounted floodlight that lights up the area of interest increases the all-up weight of the system and rules out any stealth reconnaissance mission.

Design

This project proposes the design and implementation of a deep learning model for enhancing video quality in poorly illuminated environments. Deep learning has been gaining traction in data processing because it can perform automatic feature engineering on huge unstructured datasets. This research project therefore explores how that capability can be used to replace traditional approaches to low-light enhancement under real-time conditions. Such a solution would be applicable to a wide range of autonomous systems, such as land rovers, underwater vehicles and even space rovers. Beyond unmanned platforms, the same neural pipeline can also be deployed in surveillance outposts to assist night-time visual monitoring.

A novel deep convolutional neural network was designed and trained on a dataset of 5,000 images, each paired with a corresponding procedurally generated low-light image to which a randomized degree of zoom and darkening has been applied. A graphical user interface was also integrated into the pipeline as a mock-up of a ground control station, where human operators can remotely monitor a real-time video stream enhanced by the neural network. This offers a glimpse of a future in which 5G connectivity allows high-speed, low-latency transmission of data over long distances, making offboard computation an increasingly feasible mode of real-time video processing.
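The procedural generation of training pairs described above could look roughly like the sketch below. It is only an illustration under stated assumptions: the project does not specify how zoom or darkening were implemented, so the centre-crop zoom, the gamma-plus-gain darkening model, the parameter ranges and the function names (`random_zoom`, `darken`, `make_training_pair`) are all hypothetical. The sketch also assumes the same zoom is applied to the target image so that each pair stays pixel-aligned.

```python
import numpy as np


def random_zoom(image, rng, max_zoom=1.5):
    """Centre-crop by a random factor, then resize back to the original
    size with nearest-neighbour sampling (assumed zoom model)."""
    h, w = image.shape[:2]
    zoom = rng.uniform(1.0, max_zoom)
    ch = max(1, int(round(h / zoom)))
    cw = max(1, int(round(w / zoom)))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = image[top:top + ch, left:left + cw]
    # Map every output pixel back to its nearest source pixel in the crop.
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows][:, cols]


def darken(image, rng, gamma_range=(2.0, 5.0), gain_range=(0.3, 0.9)):
    """Darken a float image in [0, 1] as gain * image**gamma
    (assumed darkening model; the ranges are illustrative)."""
    gamma = rng.uniform(*gamma_range)
    gain = rng.uniform(*gain_range)
    return gain * np.power(image, gamma)


def make_training_pair(image_uint8, rng):
    """Return (low_light_input, clean_target) as float32 arrays in [0, 1]."""
    clean = random_zoom(image_uint8.astype(np.float32) / 255.0, rng)
    dark = darken(clean, rng).astype(np.float32)
    return dark, clean


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    # Stand-in for a real photograph: random 8-bit RGB noise.
    photo = rng.integers(0, 256, size=(64, 96, 3), dtype=np.uint8)
    low_light, target = make_training_pair(photo, rng)
    print(low_light.shape, float(low_light.mean()), float(target.mean()))
```

Because gamma is above 1 and gain is below 1, every darkened pixel is no brighter than its counterpart in the target, which gives the network a well-defined input-to-target mapping to learn. A real pipeline would likely also add sensor noise, since low light reduces the signal-to-noise ratio, but that step is omitted here because the project description does not mention it.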

Project Team

Student:

- Ng Wai Hung (Electrical Engineering, Class of 2020)

Supervisor:

- Dr Tang Kok Zuea (kz.tang@nus.edu.sg)