Final year projects for AY2025/2026

EDIC offers two final year project courses for undergraduate students, namely CDE4301 Innovation & Design Capstone and CDE4301A Ideas to Start-up.

CDE4301 Innovation & Design Capstone is a two-semester project course in which students will learn how to apply and integrate knowledge and skills acquired from preceding courses in their respective majors and/or the Innovation & Design Programme to pursue an area of innovation in a design or research project. The project may be a continuation or extension of a preceding one to deliver an integrated, improved, and optimised solution to the original problem of interest, or a fresh project that arises from a new design problem or research question. Students are expected to demonstrate a high level of independent inquiry in their project, while at the same time working effectively with their project team members, faculty advisors, and industry partners.

CDE4301A Ideas to Start-up is offered for students who are interested in pursuing commercialisation of their project ideas. This two-semester course builds upon the principles of the Lean LaunchPad and the Disciplined Entrepreneurship framework to accelerate students’ understanding of the entrepreneurial process and enable further development of their ideas/projects into viable and sustainable ventures. In the process, students will learn to identify the customer and market, develop a minimum viable product (MVP) using design and engineering skills that they have garnered throughout their undergraduate study, and design a viable business model. Students will also learn the mechanics of company building, for example company formation, fundraising and intellectual property strategy. At the end of the two semesters, the students deliver an investment pitch on demo day to potential investors.

Incoming Year 4 students who are not enrolled in the Innovation & Design Programme are also welcome to sign up for CDE4301 and CDE4301A, and work on projects together with their peers from different majors.

For students in Design and Engineering majors, CDE4301 and CDE4301A automatically fulfil the Integrated Project pillar in the CDE Common Curriculum regardless of whether they are enrolled in the Innovation & Design Programme. For those in other majors, the courses are counted as unrestricted electives.

Examples of recent past projects for CDE4301 and CDE4301A may be found on the microsite of our latest EDIC Project Showcase.

CDE4301/CDE4301A project selection exercise

The project selection exercise is now open until 13 July 2025. Submit your response via the project selection form (the link is provided below).

You can opt for one of the following in the project selection exercise:

- Continue your project from EG3301R Ideas to Proof-of-Concept as a "regular" final year project in CDE4301.

- Propose your own project (including continuation of an internship or research project).

- Choose from a list of staff/industry-proposed projects.

- Join the pre-accelerator course CDE4301A Ideas to Start-up using a project from EG3301R or elsewhere.

Staff/Industry-proposed projects for CDE4301

We have a diverse range of projects that are offered by our faculty members and industry partners. They are grouped into a number of broad themes below. You can contact the project supervisors to find out more about each project.

In the online project selection form, you can choose up to 5 projects and rank them in order of preference.

Innovating for Better Healthcare

Projects in this theme aim to design better solutions to meet healthcare needs in hospitals and the community. Students learn from and work closely with healthcare professionals and academic staff to conceptualise, design, test, and develop healthcare and medical technologies.

Project supervisors: Dr Wu Changsheng (cwu@nus.edu.sg), Dr Yen Shih Cheng (shihcheng@nus.edu.sg)

This project requires students to have some knowledge of circuit design/hardware fabrication/industrial design or software skills in embedded coding or app development. This project will also be carried out in collaboration with SGH and clinicians, so we hope that students can commit to meeting timelines and milestones.

Project supervisor: Mr Nicholas Chew (nick.chew@nus.edu.sg)

Industry partner/collaborator: Singapore National Eye Centre

Inspection of surgical instruments after they have been cleaned and disinfected is a critical task to ensure that no faulty tool is used during surgical procedures. However, this process is currently done manually in the Central Sterile Supplies Department of almost every hospital. As a result, the inspection process is laborious and physically demanding. Moreover, many surgical tools, especially those used for ophthalmology surgery, have very fine features which can make the inspection task very challenging. Additionally, the annual number of day surgeries alone in public hospitals rose from 509,000 in 2019 to 565,000 in 2022. As such, the volume of surgical instruments that must be processed has increased significantly. It is not uncommon for large public hospitals to have to process more than 1,000 sets of surgical instruments daily.

Thus, in this project we aim to design an automated solution for the inspection of surgical tools. In collaboration with our industry partner, the Singapore National Eye Centre (SNEC), we will leverage technologies such as computer vision and artificial intelligence to enhance the productivity, accuracy, and safety of the inspection process for tools used in ophthalmology surgery.

The scope of the project is as follows:

- Identify the technical requirements of the solution and constraints for deployment at SNEC.

- Review the advantages and disadvantages of existing solutions.

- Generate and validate concept designs for the proposed solution.

- Select and implement appropriate components and algorithms for the selected concept design.

- Test the proposed solution to determine its performance.

Project supervisor: A/Prof Lim Li Hong Idris (lhi.lim@nus.edu.sg)

Project Scope:

- Robotic/Motors Assistive Devices: Devices like exoskeletons or robotic arms assist patients in performing movements they might struggle with. These can help in tasks like walking, gripping, or reaching.

- Sensor Technology: These devices, equipped with motion sensors, force sensors, and sometimes cameras, track a patient's movements and progress in real-time.

- User-Friendly Interface: Patients often interact with a touchscreen or mobile app that provides guidance, instructions, and feedback.

- Adaptive Therapy: AI can analyse individual patient data, including their medical history, current abilities, and progress, to create tailored rehabilitation programs that adapt over time.

- Performance Tracking: Continuous monitoring of patient movements allows for real-time feedback on performance, helping therapists make immediate adjustments to treatment plans.

- Data Analytics: AI can aggregate data to identify trends in recovery, providing insights into practical strategies and areas needing additional focus.

- Efficiency in Staffing: Automated equipment can allow therapists to manage multiple patients simultaneously, optimizing staff resources and reducing workloads.

Project supervisors: Dr Wu Changsheng (cwu@nus.edu.sg), Dr Yen Shih Cheng (shihcheng@nus.edu.sg)

Heat stress and exertional heat illness (EHI) are significant health concerns, particularly in physically demanding environments such as military training, sports, and outdoor labour. Prolonged exposure to heat, combined with high-intensity activity, can lead to heat exhaustion, heat stroke, and dehydration, which may cause severe health complications or even fatalities. The most accurate way to assess EHI risk is through core body temperature measurement, but current methods are either invasive or limited in accuracy when using single-site non-invasive sensors. A more comprehensive and real-time monitoring solution is essential for improving heat stress management. This project will develop a multimodal multi-site (MMMS) wearable sensor network that integrates wireless, miniaturized sensors to measure core body temperature, skin temperature, heart rate, and gait variability across multiple body regions. By leveraging sensor fusion and real-time data processing, this system will enhance the accuracy of heat strain assessment, enabling early intervention and personalized risk prediction for individuals exposed to extreme heat conditions.

This project requires students to have some knowledge of circuit design/hardware fabrication/industrial design or software skills in embedded coding/app development/data analysis. This project will also be carried out in collaboration with clinicians, so we hope that students can commit to meeting timelines and milestones.

Project supervisor: Mr Sim Zhi Min (zmsim@nus.edu.sg)

Industry partner/collaborator: Behavioural and Implementation Science Interventions (BISI), Yong Loo Lin School of Medicine

Devices and systems are gradually incorporating AI. This, however, presents challenges for clinicians and engineers hoping to fine-tune AI models in the future. Students will work on standard healthcare protocols and middleware systems that help interface hardware with software, and integrate novel AI technologies to aid clinical decision making.

Project supervisor: Dr Andy Tay Kah Ping (bietkpa@nus.edu.sg)

T cell-based therapies are revolutionizing medicine, from CAR-T cell immunotherapies to next-generation applications in immune modulation, tolerance induction, and tissue regeneration. Yet, the current toolkit for engineering human T cells is dominated by viral vectors and electroporation, which impose limitations in safety, tunability, and translational scalability. This proposal introduces a modular nanoneedle-based bioreactor that enables programmable, non-viral delivery of proteins and mRNA into human T cells.

The proposed system is designed as a versatile platform that supports both discovery-oriented research and clinically relevant manufacturing, such as CAR-T production. Its programmable delivery system allows the precise modulation of T cell function and phenotype without genetic integration, offering a powerful alternative to current engineering methods. The goal is to demonstrate this flexibility through a set of functionally distinct yet methodologically unified experiments, paving the way for broader applications in immune engineering.

Project supervisor: Mr Nicholas Chew (nickchew@nus.edu.sg)

The medical devices, robotics, simulation and imaging group in E2 Lab (under the Mechanical Engineering department) developed a surgical trainer more than a decade ago, featuring FPGA programming for the real-time controls. With the rise and development of AI, the group is now developing a next-generation model that can incorporate open VLA models for voice activation of the robotic trainer. However, the FPGA architecture previously built into the lower-level controls needs to be redesigned into an open software architecture (i.e., ROS2) to allow some machine intelligence to be implemented in the trainer.

This project aims to build a scaled-down prototype based on the initial surgical trainer design and to assess control system responsiveness and stability for real-time controls using ROS2. The scope of the project is as follows:

- Design and manufacture a scaled-down prototype of the research group's current design

- Propose a new controller (PC-based, GPU-based, etc.) to replace the FPGA modules

- Develop ROS2 control scripts for operating the surgical trainer

- Analyse responsiveness (robot trajectory vs controls lag time, ROS publisher-subscriber response time, etc.) of the system

Note: Only students with formal ROS background or a robotics portfolio involving ROS will be considered for this project.
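As a rough illustration of the responsiveness analysis listed in the scope above, the sketch below summarises message latency from matched send and receive timestamps. It is a minimal example under stated assumptions, not the research group's method: the function name `latency_summary` and the sample timestamps are hypothetical, and in practice the timestamps would come from logs recorded by the publisher and subscriber nodes.

```python
import math


def latency_summary(sent_s, received_s):
    """Summarise per-message latency in milliseconds.

    sent_s and received_s are matched lists of timestamps in seconds
    (hypothetical log data; one entry per message).
    """
    if len(sent_s) != len(received_s) or not sent_s:
        raise ValueError("need matched, non-empty timestamp lists")
    latencies_ms = sorted((r - s) * 1000.0 for s, r in zip(sent_s, received_s))
    # Nearest-rank 95th percentile: smallest value with at least 95% of samples at or below it.
    rank = math.ceil(0.95 * len(latencies_ms))
    return {
        "mean_ms": sum(latencies_ms) / len(latencies_ms),
        "p95_ms": latencies_ms[rank - 1],
        "max_ms": latencies_ms[-1],
    }


# Made-up timestamps for illustration only.
sent = [0.000, 0.010, 0.020, 0.030]
received = [0.002, 0.013, 0.021, 0.036]
summary = latency_summary(sent, received)
print(summary)
```

Reporting a high percentile alongside the mean is a common choice for real-time control, since occasional long delays matter more than the average.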

Project supervisor: Dr Tang Kok Zuea (kz.tang@nus.edu.sg)

Industry partner/collaborator: National Dental Centre of Singapore (NDCS)

The handling and sorting of dental burs is an essential yet time-consuming task in clinical settings. In Singapore, all dental clinics handle and sort dental burs manually. However, Meng et al. have pointed out that this is time-consuming work that may lead to classification errors. In addition, surgical instruments are relatively sharp and may carry infectious substances such as bloodstains and biological tissue. To address these challenges, AI vision-based handling using a robotic handler and vibratory feeding offers a potential automated solution that minimizes human intervention.

Project supervisors: Dr Wu Changsheng (cwu@nus.edu.sg), Dr Yen Shih Cheng (shihcheng@nus.edu.sg)

This project aims to develop an advanced, non-invasive wireless wearable mechano-acoustic (WWMA) sensor system to monitor vocal attributes and gait patterns for the early detection of Mild Cognitive Impairment (MCI). The system will integrate high-sensitivity inertial measurement units with AI-driven signal processing algorithms to extract meaningful biomarkers from real-time speech and movement data. To validate the system, we will collaborate with clinicians to collect data alongside MRI and neuropsychological assessments. This integration ensures real-world validation, bridging engineering innovations with clinical applications for early dementia screening. The project aims to develop a scalable, cost-effective platform for broader community and healthcare deployment.

This project requires students to have some knowledge of circuit design/hardware fabrication/industrial design/data analysis. This project will also be carried out in collaboration with clinicians, so we hope that students can commit to meeting timelines and milestones.

Innovating for Future Mobility

Projects in this theme aim to design future mobility solutions and novel vehicles to move people and goods faster, more efficiently, and more comfortably.

Project supervisor: Mr Lim Hong Wee (hongwee@nus.edu.sg)

Industry partner/collaborator: Red Dot Mobility

Red Dot Mobility is a company specializing in electric powertrain customization for e-bikes, buggies and other electric-powered mobility devices. They are looking for projects on integrating their products into bikes and other mobility devices. Students will get hands-on experience designing, fabricating, installing and testing real-world components. The exact project scope can be discussed according to the needs of the company and the student's core knowledge.

Project supervisors: Mr Lim Hong Wee (hongwee@nus.edu.sg), A/Prof Ashwin M Khambadkone (eleamk@nus.edu.sg)

The NUS Formula SAE (FSAE) team is looking for an individual wheel dynamometer to test their 80 kW race vehicle. Students will need to specify components suitable for the use case, then design and prototype the dynamometer. The race car will be used to test the dynamometer. The work involves building electrical circuits, designing the frame, and mounting and integrating the dynamometer with the race car safely. Work will be distributed according to the core knowledge of each student.

Project supervisors: Mr Lim Hong Wee (hongwee@nus.edu.sg), Mr Kenneth Neo (kenneth@nus.edu.sg)

In collaboration with a start-up pioneering a new era in internal combustion engine (ICE) design, this project looks into a bold alternative to the century-old crank-slider mechanism: a design promising a diesel-like torque curve in a compact gasoline package. Students will validate, simulate, and shape this revolution.

Innovating for Smarter Living

Projects in this theme focus on designing smart devices and services to enhance everyday life, work, and play.

Project supervisor: (to be confirmed)

Industry partner/collaborator: TE Connectivity

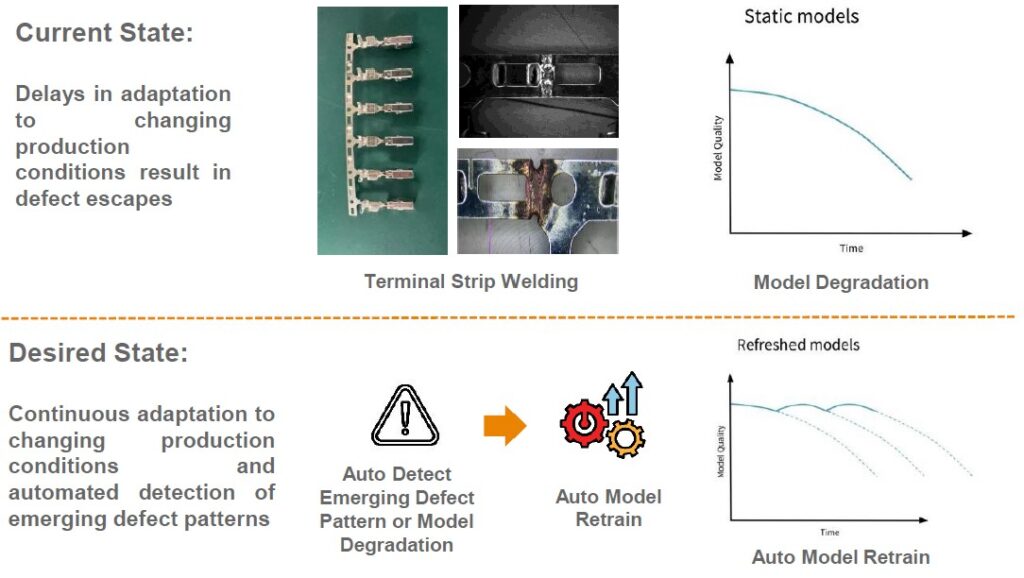

Problem statement:

- Manufacturing quality control systems struggle with accurate defect classification.

- Rare defects have limited training examples and are often missed.

- Manual retraining creates delays in detection and adaptation.

Proposed approach:

- Develop high-accuracy welding defect classification system.

- Create monitoring framework for defect pattern analysis.

- Implement automated model retraining pipeline triggered by pattern changes.

- Build visualisation tools for defect trends and system performance.
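One way to picture a retraining pipeline "triggered by pattern changes" is a simple drift check on the mix of predicted defect classes. The sketch below is only an illustration under assumptions: the class names, the threshold of 0.2, and the use of total variation distance are all hypothetical choices, not the industry partner's design.

```python
from collections import Counter


def class_distribution(labels, classes):
    """Return the fraction of labels falling in each class, in the order of `classes`."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return [counts[c] / len(labels) for c in classes]


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def should_retrain(reference_labels, recent_labels, classes, threshold=0.2):
    """Flag retraining when the recent class mix drifts from the reference mix."""
    p = class_distribution(reference_labels, classes)
    q = class_distribution(recent_labels, classes)
    return total_variation(p, q) > threshold


# Hypothetical defect classes and label counts, for illustration only.
classes = ["ok", "porosity", "crack"]
reference = ["ok"] * 90 + ["porosity"] * 8 + ["crack"] * 2
recent = ["ok"] * 60 + ["porosity"] * 10 + ["crack"] * 30

print(should_retrain(reference, recent, classes))     # distance 0.3 exceeds 0.2
print(should_retrain(reference, reference, classes))  # identical mix, distance 0.0
```

A check like this only detects shifts among known classes; spotting genuinely new defect types would need a separate anomaly detection step.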

Potential innovations:

- Continuous learning system and adaptive machine learning architecture that responds to production changes.

- Anomaly detection for emerging defect patterns.

Expected/target benefits:

- Increased detection of rare defects leading to reduced flow out of defective products.

- Faster adaptation to new defect types and continuous improvement of detection accuracy.

Notes:

- Students who take up this project may have the opportunity to be engaged as interns during the project.

- Students who take up this project may be required to go for a site visit of TE Connectivity's manufacturing plant in Suzhou, China to better understand the problem and/or test their solution.

- Students who take up this project are expected to enter their project for the TE AI Cup in 2026.

Project supervisor: (to be confirmed)

Industry partner/collaborator: TE Connectivity

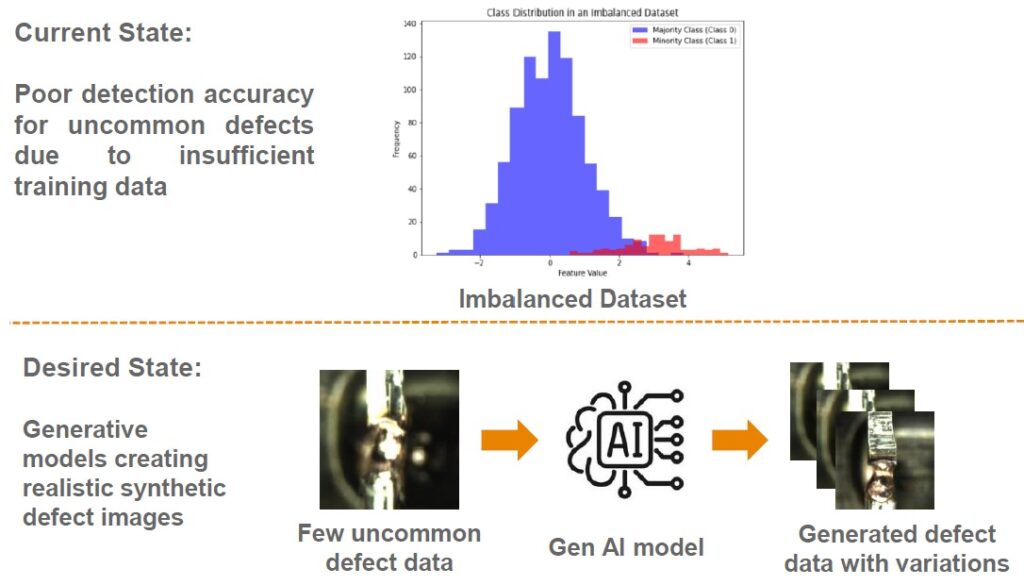

Problem statement:

- AI-based inspection systems require large datasets of defect images.

- Rare defects lack sufficient data for effective training and detection accuracy suffers for uncommon but critical defects.

Proposed approach:

- Develop generative models optimised for manufacturing defect generation with control mechanisms ensuring physical plausibility of different defect types.

- Implement systems to generate variations in orientations, lighting, and severity.

- Design validation methodologies for models trained on synthetic data.

Potential innovations:

- Generative AI with physical constraint mechanisms for realistic defect generation.

- Domain-specific data augmentation techniques.

Expected/target benefits:

- Improved detection of rare but critical defects.

- Balanced training datasets for AI inspection systems resulting in higher overall inspection accuracy.

Notes:

- Students who take up this project may have the opportunity to be engaged as interns during the project.

- Students who take up this project may be required to go for a site visit of TE Connectivity's manufacturing plant in Dongguan, China to better understand the problem and/or test their solution.

- Students who take up this project are expected to enter their project for the TE AI Cup in 2026.

Project supervisor: (to be confirmed)

Industry partner/collaborator: TE Connectivity

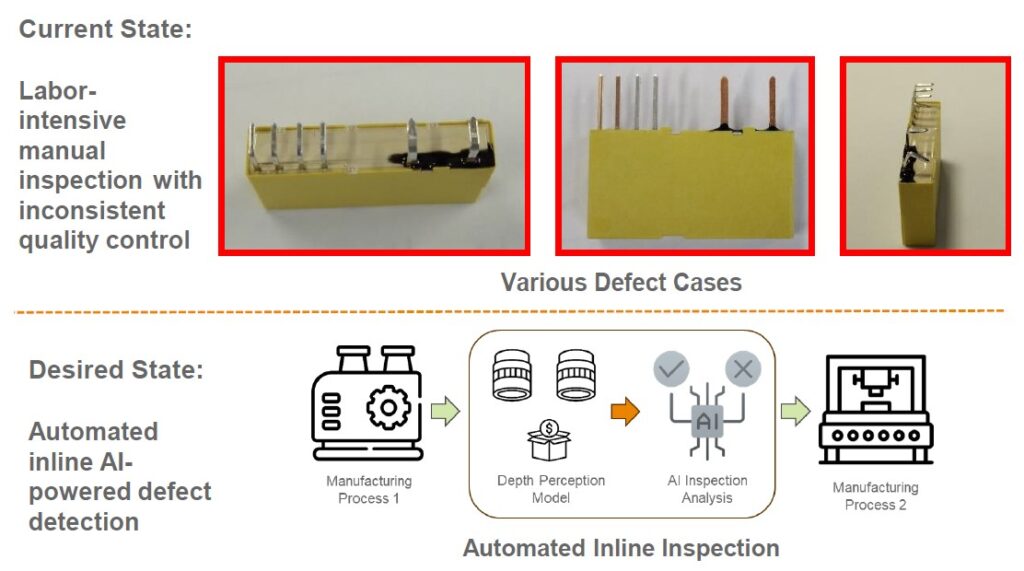

Problem statement:

- Manufacturing quality control currently lacks proper inspection capabilities.

- Labour-intensive manual microscope inspection for coil welding and relays is inefficient.

- Human inspection introduces variability and limits production throughput.

Proposed approach:

- Create AI image processing algorithms trained on production samples.

- Implement real-time processing system (>~1.5 seconds per piece).

- Establish a data collection framework that enhances AI model accuracy over time.

Potential innovations:

- Real-world application of computer vision and machine learning.

- High-resolution imaging in vibration-prone manufacturing environments.

Expected/target benefits:

- Increase production throughput.

- Reduce labour costs for manual inspection.

Notes:

- Students who take up this project may have the opportunity to be engaged as interns during the project.

- Students who take up this project may be required to go for a site visit of TE Connectivity's manufacturing plant in Trutnov, Czech Republic to better understand the problem and/or test their solution.

- Students who take up this project are expected to enter their project for the TE AI Cup in 2026.

Project supervisor: Dr Elliot Law (elliot.law@nus.edu.sg)

Industry partners/collaborators: Housing & Development Board, South West Community Development Council

Recently, several town council estates in Singapore have stepped up efforts to control the pigeon population by reducing human food sources and culling. Pigeons pose health risks as they carry pathogens such as Salmonella bacteria, while their droppings can spread ornithosis. These birds also cause damage to property.

Pigeons are commonly found nesting on air conditioning ledges of residential buildings in Singapore. Current deterrents such as netting interfere with ledge functionality. Therefore, the goal of this project is to develop holistic solutions to deter pigeons from nesting on air conditioning ledges without hindering air-conditioner maintenance or clothes drying activities.

Students could explore:

- Technological solutions, such as targeted audio or visual deterrents that can react in a dynamic manner in the presence of pigeons.

- Physical design solutions, such as making the aircon ledges difficult for pigeons to land and nest on.

- Maintenance-focused solutions, such as cleaning mechanisms that allow residents to safely clear nesting materials from inside their homes.

The solution should also be:

- Weather resistant

- Cost-effective

- Easy to install

- Of minimal visual impact on building aesthetics

- Compliant with animal welfare guidelines

Project supervisors: Mr Eugene Ee (wheee@nus.edu.sg), Prof Chan Tong Leong (chan.tl@nus.edu.sg)

Wayfinding solutions are commonly found in our daily lives and span mediums such as printed signage, concierge services and digital kiosks. They aim to reduce stress for people navigating a new environment and allow them to gather spatial information to better understand their surroundings. Recent advancements in technology have shifted most wayfinding solutions towards mobile applications. This project aims to rethink how wayfinding can be done using an app-less solution while maintaining its effectiveness. Students working on this project will be expected to put design thinking methodologies into practice, leading up to the development and testing of one or more prototype solutions.

Project supervisor: A/Prof Mark De Lessio (mdeles@nus.edu.sg)

There is tremendous opportunity in creating front-end apps that interface with Large Language Models (LLMs), enabling individuals and/or businesses to interact with them in a manner that adds value to both. I would be very interested in exploring such opportunities with students who have ideas that fit into this category. The Automated Assessment Creation Tool project is based on the concept of fairly assessing student comprehension based on the content delivered in the learning environment. The premise is the development of a device/app that can be used to record/scan delivered content and then interface with LLMs to automatically create assessments that measure understanding of the content that has been delivered. There are a number of options to possibly achieve this through variations of AI, ranging from performing keyword analysis on the digitized content and then automatically selecting the best assessment questions from a pool of pre-determined questions, to actually automating the creation of relevant assessment material/questions based on the digitized content that has been captured. A significant goal is to ensure that the assessment is closely based on the content that has actually been delivered in the classroom during the term.

Project supervisors: Dr Yen Shih Cheng (shihcheng@nus.edu.sg), Mr Graham Zhu (graham.zhu@nus.edu.sg)

Industry partner/collaborator: Bang & Olufsen

With the advent of spatial audio and 3D audio effects, smart speakers now have the ability to use advanced algorithms and audio processing techniques to place sounds in different parts of a room, creating immersive audio landscapes. In addition, by using precise positioning technologies like Ultra-Wideband (UWB) to localise the listener, these immersive experiences can potentially be much more directed and even follow a listener as they move around a room.

In this project, we will work with Bang & Olufsen (who are renowned for creating iconic audio experiences) to explore these and other technologies (e.g. on-device LLM for voice control (https://gektor650.medium.com/audio-transcription-with-openai-whisper-on-raspberry-pi-5-3054c5f75b95), parametric acoustic arrays (https://youtu.be/Fbphhg9ArXw), innovative mechanical designs to reduce discomfort while using headphones, actuating audio transparent materials in the Beosound Shape (https://www.bang-olufsen.com/en/sg/speakers/beosound-shape) to provide visual representations of music (https://youtu.be/VTEOu1p_0OU), etc.) to design and test new listening experiences.

Project supervisors: Dr Yen Shih Cheng (shihcheng@nus.edu.sg), Mr Graham Zhu (graham.zhu@nus.edu.sg), Ms Erin Ng (xin.le@nus.edu.sg)

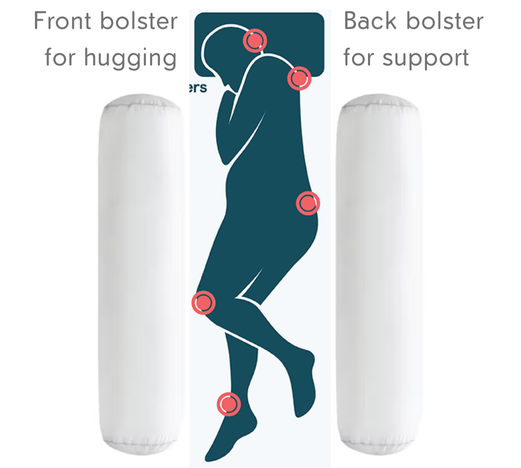

Snoring and obstructive sleep apnea are widespread sleep problems that affect millions of individuals worldwide, disrupting both their own sleep quality and that of their bed partners. To address this problem, this project aims to design an ultimate anti-snoring pillow that is comfortable, effective, practical for integration into a user's daily life, and customisable according to their preferences and sleeping posture. In addition, we will develop a simpler and cheaper way to measure and monitor sleep quality (apnea episodes) and sleeping positions.

Project supervisor: Dr Gabriel Lipkowitz (gel@nus.edu.sg)

Industry partners/collaborators: Apple

Spatial computing offers the potential to seamlessly blend the digital and physical worlds, opening up opportunities for qualitatively novel user experiences. In the context of physical product design, such technology offers the potential to bridge the divide between digital conceptualization and ideation, and those real-world contexts in which products will be applied by end-users. However, few applications have successfully demonstrated this concept in actual practice due to challenges in designing and developing applications and content for these platforms.

This project will seek to make progress towards realizing this goal by developing applications in the context of the burgeoning, yet still nascent, ecosystem around spatial computing, an industry priority. To do so, students will develop natively for the Apple Vision Pro device using the high-level, general-purpose Swift programming language, implementing and drawing upon features such as 3D model interactivity, immersive scene understanding, and real-time simulation and rendering. Students will take specific contexts, including but not limited to engineering, architectural design, and product design, and develop software applications to compellingly visualize such products in a user’s spatial environment, in a manner highly sensitive to user interaction and experience. Students will aim to release an app prototype on the Vision Pro App Store, which future work will extend to conduct in-depth human-computer interaction (HCI) studies.

Depending upon the project outcome, students will have the opportunity to pitch their projects to industry partners, potentially including at the Apple Developer Centre in Singapore, as well as in the broader Singapore spatial computing development community.

Project supervisors: Mr Lim Hong Wee (hongwee@nus.edu.sg), Mr Kenneth Neo (kenneth@nus.edu.sg)

The Markforged 3D printer can print continuous fiber reinforcement within its Onyx material. This increases the strength of the component in the direction parallel to the fiber. It is thus important to be able to model this strength in CAE simulations, using software like Ansys, for design optimisation.

Students will conduct ASTM-based experiments on physical printed fiber-reinforced prototypes and create a CAE model using Ansys.

Project supervisor: Mr Graham Zhu (graham.zhu@nus.edu.sg)

Industry partners/collaborators: Movement for the Intellectually Disabled of Singapore (MINDS), Down Syndrome Association (Singapore), Engineering Good Ltd

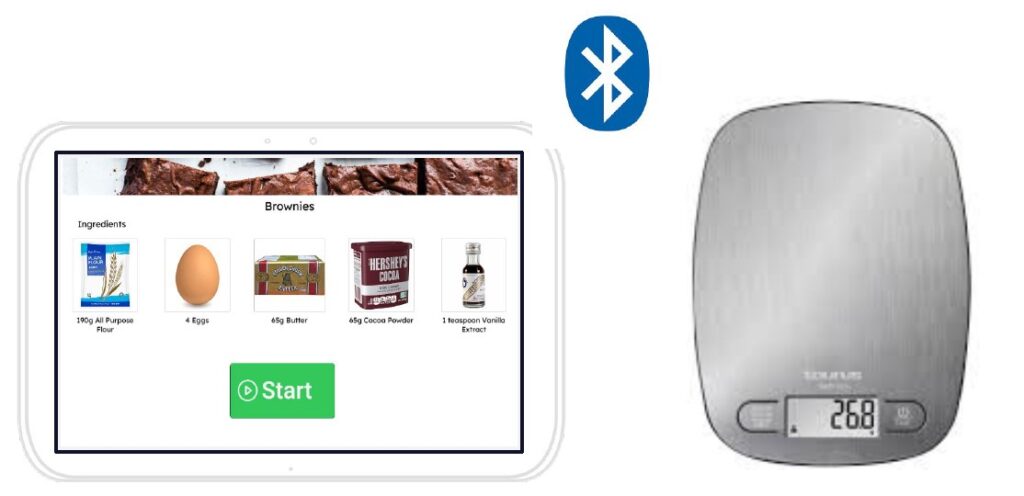

This project aims to support persons with intellectual disabilities (PwID) in performing the task of weighing baking ingredients, as part of vocational training and for inclusive employment jobs. Many PwID with Down Syndrome are literate and have difficulty processing numbers: they are not able to read recipes and understand, for example, whether a number like 500g is more or less than 450g. Most, though, are able to understand and follow verbal instructions.

To address this problem, this project aims to develop a mobile app with the following features that can be paired with a weighing scale:

- Enable trainers to add in recipes

- Guide users with step-by-step audio instructions

- Connect wirelessly with a weighing scale

- Determine whether the required weight is reached and provide corresponding audio instructions

Students who take up this project will work closely with the industry partners and their clients or beneficiaries to co-develop and test the proposed solution.
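To make the weight-check feature listed above more concrete, here is a minimal sketch of the decision logic that could sit between the scale reading and the audio prompt. Everything in it is an assumption for illustration: the function name `weighing_prompt`, the 5 g tolerance, and the wording of the prompts are hypothetical, and the real prompts would be co-developed with trainers and users.

```python
def weighing_prompt(target_g, measured_g, tolerance_g=5.0):
    """Choose a spoken instruction by comparing the scale reading with the recipe target.

    target_g: weight required by the recipe, in grams.
    measured_g: current reading from the weighing scale, in grams.
    tolerance_g: how close counts as "enough" (assumed value, to be tuned with trainers).
    """
    if target_g <= 0 or tolerance_g < 0:
        raise ValueError("target must be positive and tolerance non-negative")
    difference = measured_g - target_g
    if abs(difference) <= tolerance_g:
        return "That is enough. Well done!"
    if difference < 0:
        return "Add a little more."
    return "Too much. Take a little out."


# Example readings for a 500 g target.
for reading in (450, 502, 520):
    print(reading, "->", weighing_prompt(500, reading))
```

Keeping the comparison inside the app means the user never has to judge whether one number is larger than another; they only follow the spoken instruction.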

Project supervisors: Mr Kenneth Neo (kenneth@nus.edu.sg), Mr Lim Hong Wee (hongwee@nus.edu.sg)

With the growing trend of carbon fibre composite products, innovative solutions are in high demand to reduce manufacturing time, cost and waste. Moulds are the foundation of composite tooling and can be very expensive. Students will learn about and investigate composite manufacturing processes and trends. With sufficient knowledge, students will derive innovative solutions for composite manufacturing that reduce manufacturing costs and waste.

The project will involve proper engineering work such as materials selection, CAD design, optimisation studies, manufacturing techniques and testing.

Project supervisor: A/Prof Lim Li Hong Idris (lhi.lim@nus.edu.sg)

Industry partner/collaborator: Tertiary InfoTech

This project uses a Jetson Nano Super and a combined Large Language Model (LLM) and Vision Language Model (VLM) to control a robotic arm. The project involves integrating the LLM and VLM to interpret natural language commands and visual information, which are then translated into control instructions for the robotic arm. This allows for precise control of the robotic arm’s movements.

In a team, you will develop a simulation, which allows for rapid prototyping and testing. Following that, you will develop and demonstrate the integration through the control of a robotic arm. Foundational examples in LLM and VLM have been developed through two separate UROP projects. An integrated approach to using the LLM and VLM models will deliver benefits in training the LLM. The LLM can be asked to perform a pick-and-place motion with an object through text-based inputs. The LLM needs to describe this object based on the tokens identified. The VLM can identify the objects within the workspace to generate textual content, which can be compared with the LLM tokens. The similarity between the LLM and VLM outputs can be used to train the LLM. You will have an opportunity to work with an industry partner on this group project.

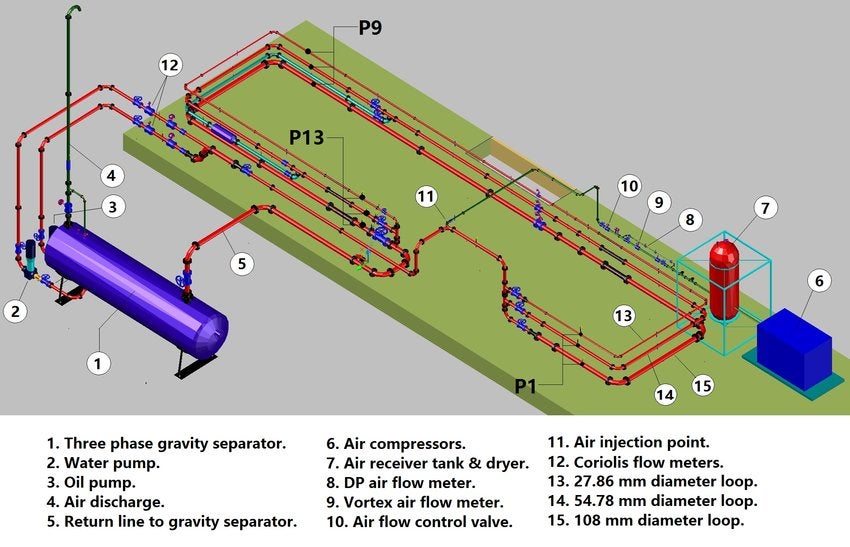
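The comparison between the LLM's object description and the VLM's textual output, described above, can be illustrated with a very simple similarity score. The sketch below uses Jaccard similarity over lowercase word sets as a stand-in; this is an assumption for illustration only, since the project text does not specify the similarity measure, and a real system would more likely compare model tokens or embeddings. The example strings are made up.

```python
import re


def word_set(text):
    """Split text into a set of lowercase alphanumeric words (a crude stand-in for model tokens)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard_similarity(text_a, text_b):
    """Jaccard similarity: shared words divided by all distinct words across both texts."""
    a, b = word_set(text_a), word_set(text_b)
    if not a and not b:
        return 1.0  # two empty descriptions are treated as identical
    return len(a & b) / len(a | b)


# Hypothetical outputs, for illustration only.
llm_description = "a small red cube"
vlm_caption = "red cube on the table"

score = jaccard_similarity(llm_description, vlm_caption)
print(round(score, 3))  # 2 shared words out of 7 distinct words
```

A score like this could serve as a feedback signal: a low value suggests the LLM's description of the target object does not match what the VLM sees in the workspace.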

Project supervisors: Mr Royston Shieh (shiehtw@nus.edu.sg), A/Prof Loh Wai Lam (mpelohwl@nus.edu.sg)

Multiphase flows are commonly encountered in the oil and gas, chemical, and power generation industries. The transition between different flow regimes — such as stratified, slug, annular, or bubbly flow — can lead to complex transient forces acting on the internal surfaces of pipelines and flow equipment. These flow regime-induced forces can result in vibration, fatigue, and failure if not properly understood and mitigated.

This project aims to investigate the hydrodynamic forces generated by different flow regimes in horizontal or near-horizontal pipe segments. Students will employ a combination of Computational Fluid Dynamics (CFD) and experimental methods at the NUS Multiphase Oil-Water-Air Flow Loop Facility to characterise and quantify these forces under varying flow conditions.

This project is suitable for Mechanical, Chemical, and Civil Engineering students who are interested in flow assurance. Students who wish to take up this project should have completed a course in fluid mechanics (e.g. ME2134, ME2135) prior to Year 4.

Project supervisor: A/Prof Mark De Lessio (mdeles@nus.edu.sg)

With rotary telephones consigned to the trash heap of a bygone era, the concept of dialling has been replaced by users interfacing with their mobile phones via a keypad. However, many people struggle to use their mobile phones quickly and efficiently because of the small size of the keypad. This project proposes researching technical solutions that could address this issue. For example, would it be possible to incorporate holographic or similar technology to enlarge a key as the finger or thumb approaches it, making selection easier? Perhaps there is an alternative technology that could achieve the same result.

Project supervisor: Dr Aleksandar Kostadinov (aleks_id@nus.edu.sg)

Background

Timely, localized plant health data is critical for reducing input waste and improving crop yields. Biosensors can provide real-time, in-situ monitoring of plant stress, nutrient levels, or disease, yet their integration into field systems remains underdeveloped.

Aims

To explore, design, and test low-cost biosensor concepts for detecting key plant health indicators (e.g., soil, water, and ambient properties; plant health; pest and pathogen presence).

Methodology

This research-oriented project will involve a survey of current biosensor technologies, selection of target biomarkers, and conceptual design of sensor units. Depending on scope, lab testing or simulation of sensing mechanisms may be conducted. Collaboration with researchers from various departments within NUS is encouraged, so that existing technologies can be used for further development.

Deliverables

- Technology review

- Biosensor design concepts

- Proof-of-concept setup (lab or simulation-based)

- Feasibility analysis

- Research paper-style report and showcase poster

Note:

The proposed topic, methods, and deliverables are tentative and intended as a starting point. Depending on the team’s size, interests, and strengths, both the direction of the project (research or development) and its specific outputs (hardware, software, principles) can be adapted accordingly. Students are encouraged to explore related avenues if they find them more compelling.

To support this process, a selection of handbooks and manuals is provided to help kickstart research and frame initial explorations:

Project supervisor: A/Prof Aaron Danner (eleadj@nus.edu.sg)

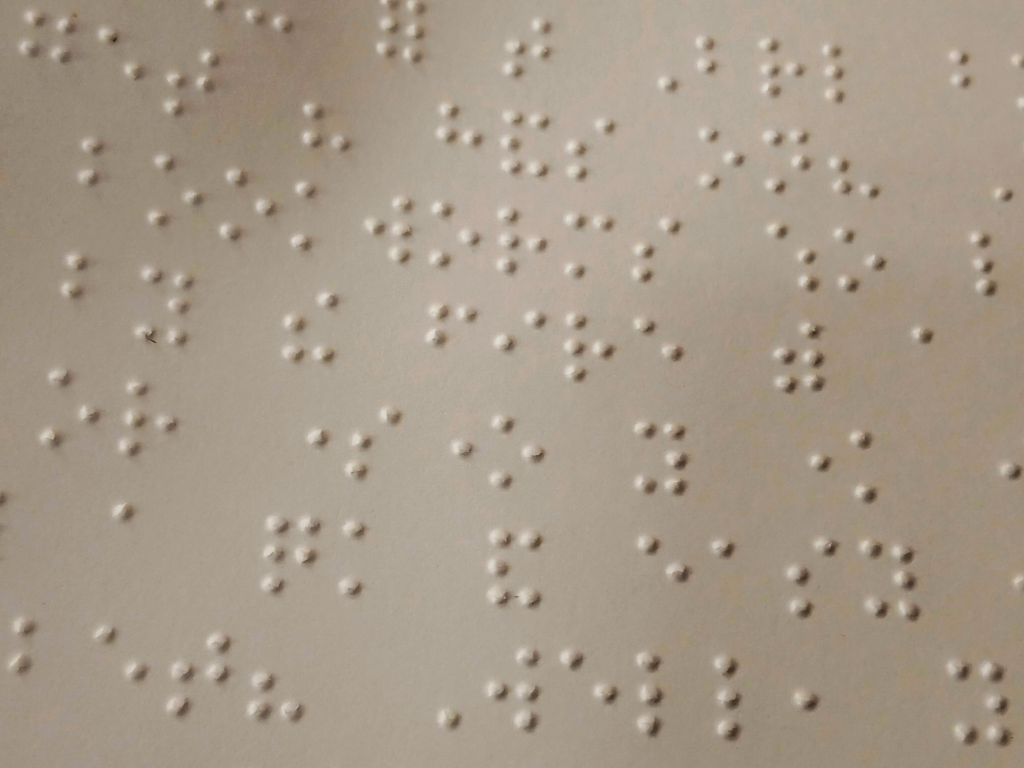

Current computerized braille displays are extremely limited in their capabilities: single-line 40-character displays currently cost over S$3000 each, and a multiline display like the Monarch (only 10 x 32 characters) costs a whopping US$17,900.00. This is because these displays have thousands of small moving parts. Let's investigate a simpler method of fabricating a display by using methods borrowed from microelectronics. We will make use of shape memory alloys (phase change materials), which have the required deformation properties, and engineer a metallic sheet to deform appropriately when a voltage is applied, in such a way that a braille dot is raised or lowered at the correct position. We can then investigate whether photolithography can be used to create matrix-addressable dots by applying voltages at the correct positions. This project is challenging because the required braille dot deformation is unlikely to be easy to achieve, as phase change materials are notoriously tricky to work with, but it is definitely physically possible and thus worthy of investigation. The benefit of a positive outcome is that we can then envision large braille displays with no moving parts (other than the dots themselves) manufactured at low cost using monolithic fabrication techniques. This would be extremely beneficial for the visually impaired.

Project supervisor: Mr Nicholas Chew (nickchew@nus.edu.sg)

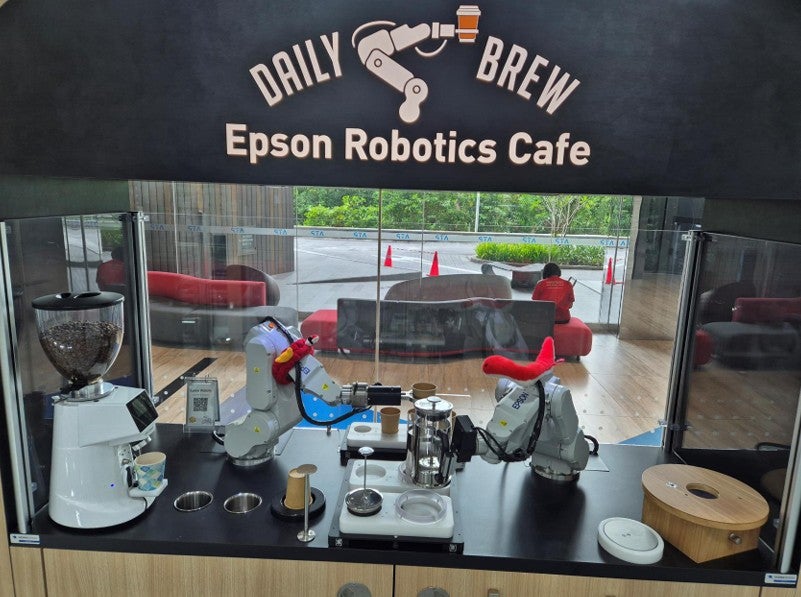

Industry partner/collaborator: Epson Robotics

In our tech-driven world, automation and robotics are revolutionising various industries, including food and beverage services. The traditional French press coffee-making process, which takes about 10 minutes to prepare just 4 cups, is an ideal candidate for automation. This project aims to optimise the coffee-making process by improving the motion path planning of two Epson 6-axis robots, thereby reducing cycle time and enhancing operational efficiency. A demo machine was previously developed for an event at the Singapore Museum. This project offers an exciting opportunity to refine the coffee-making process, further develop this robot-based system, and create a solution that can be showcased both locally and internationally at exhibitions, promoting the future of "manless" coffee making.

Project Objectives:

- Reduce Coffee Preparation Time: Streamline the process and reduce the preparation time to just 1-2 minutes.

- Automation and Stable Operations: The machine must operate autonomously and ensure consumables (paper cups, water, coffee beans, etc.) can be stored for up to 3 days of continuous coffee-making without requiring human intervention.

- Network Architecture Improvement: Remove the BnR PLC and transition control to the robots themselves. Digital inputs and outputs will be connected to each robot controller to establish the necessary communication handshake between the machines.

- Explore Innovative End-Effector Designs: Students are encouraged to think creatively and come up with new and innovative end-effector designs to streamline the coffee-making process, improving overall system efficiency.

- Robot Specifications: The system will be limited to two Epson 6-axis robots, each with a 4 kg payload capacity and a 600mm arm reach, making it necessary to optimize the design and operational processes within these constraints.
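The communication handshake over digital inputs and outputs mentioned in the objectives above can be sketched as a four-phase request/acknowledge exchange. This is a minimal simulation under stated assumptions: the signal names `req` and `ack`, the class names, and the choice of a four-phase protocol are hypothetical, and the real robot controllers would use their own I/O commands rather than Python.

```python
class RobotA:
    """Requester: raises 'req', waits for 'ack', then drops 'req'."""

    def __init__(self):
        self.req = False    # digital output wired to an input of robot B
        self.done = False

    def step(self, ack):
        if not self.req and not ack and not self.done:
            self.req = True       # e.g. cup is in place, ask B to act
        elif self.req and ack:
            self.req = False      # B has acknowledged, withdraw request
            self.done = True


class RobotB:
    """Responder: raises 'ack' after seeing 'req', drops it when 'req' falls."""

    def __init__(self):
        self.ack = False    # digital output wired to an input of robot A

    def step(self, req):
        if req and not self.ack:
            self.ack = True       # task finished, acknowledge
        elif not req and self.ack:
            self.ack = False      # request withdrawn, lines return to idle


def run_handshake(max_steps=10):
    """Return the sequence of (req, ack) line states for one full cycle."""
    a, b = RobotA(), RobotB()
    trace = [(a.req, b.ack)]
    for _ in range(max_steps):
        a.step(b.ack)
        trace.append((a.req, b.ack))
        b.step(a.req)
        trace.append((a.req, b.ack))
        if a.done and not b.ack:
            break
    return trace


trace = run_handshake()
print(trace)
```

Each robot only reads the other's output line, so no shared controller is needed, which is the point of removing the PLC. A real implementation would also need timeouts so that a stalled robot does not block the other indefinitely.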

Project supervisor: A/Prof Mark De Lessio (mdeles@nus.edu.sg)

There is tremendous opportunity in creating front-end apps that interface with Large Language Models and enable individuals and/or businesses to interact with them in a manner that adds value to both. I would be very interested in exploring such opportunities with students who have ideas that fit into this category. The “Smart Advisor Tool” is a front-end tool that helps individuals assess what their personal interests are and how these might correlate with the degree programs that would best enable them to realize these interests as working adults. It would essentially leverage AI to categorize their interests, identify relevant degree programs, identify the types of jobs available to individuals with similar interests, identify the best universities that offer such programs and, eventually, perhaps even the organizations that have such jobs available. There would be many ways to monetize such a tool, including working with universities to advertise their programs and/or partnering with job placement agencies, or even with organizations themselves, to refer candidates who have already been vetted and matched with positions they are currently seeking to fill.

Innovating for Sustainable Cities

Projects in this theme delve into the challenges of urbanisation and sustainability. They focus on improving liveability in the urban environment and management of limited resources such as water, energy, and land in an efficient and sustainable manner.

Project supervisors: Dr Elliot Law (elliot.law@nus.edu.sg), Dr Jovan Tan (jovantan@nus.edu.sg)

Industry partner/collaborator: the moonbeam co.

the moonbeam co. is gaining momentum in the food tech industry by transforming spent coffee grounds into baked goods. Currently the company manages centralised waste streams (such as from large-scale brewing operations) but there are significant untapped resources from decentralised waste such as spent grounds from espresso machines used in cafes and smaller food and beverage establishments.

This project aims to develop a proof-of-concept solution that can efficiently process such decentralised waste streams on-site and convert them into baked goods. The challenge in this project is to decentralise the moonbeam co.'s existing process, enabling local processing without relying on a central hub, while maintaining product quality, scalability, and sustainability. The scope of work may include the following:

- Designing a system that collects, processes, and integrates spent espresso grounds into a consistent baking mix.

- Prototyping a machine that can autonomously operate in smaller cafes or offices.

- Ensuring the process is energy efficient and retains the nutritional properties of the grounds.

- Scaling the solution in a manner that ensures cost-efficiency as operations are expanded.

Project supervisor: Dr Aleksandar Kostadinov (aleks_id@nus.edu.sg)

Background

Conventional greenhouses often fail in hot, humid regions due to overheating and poor ventilation. Tropical climates demand alternative protected cultivation systems that are low-cost, passive, and context-adapted.

Aims

To design a climate-responsive protected cultivation system optimized for tropical agricultural use, balancing thermal regulation, airflow, and crop protection.

Methodology

The team will begin with contextual research on tropical agriculture and climate data. Design exploration will consider materials, passive ventilation, shading, and modularity. Prototyping may focus on scale models or digital simulations (e.g. CFD for airflow). Stakeholder input may be gathered from local growers or AgriTech solution developers.

Deliverables

- Climate and user needs analysis

- Design proposals (sketches, models)

- Prototype (physical or digital)

- Performance simulation/validation

- Design report and showcase poster

Note:

The proposed topic, methods, and deliverables are tentative and intended as a starting point. Depending on the team’s size, interests, and strengths, both the direction of the project (research or development) and its specific outputs (hardware, software, principles) can be adapted accordingly. Students are encouraged to explore related avenues if they find them more compelling.

To support this process, a selection of handbooks and manuals is provided to help kickstart research and frame initial explorations:

Project supervisor: A/Prof Lim Li Hong Idris (lhi.lim@nus.edu.sg)

Industry partner/collaborator: E&ST Environmental Pte Ltd

Project objective: develop and fabricate a concentrated solar thermal (CST) system with applications in distillation and production of biochar.

Project Scope:

- Explore innovative approaches to increase the energy concentration at light spots and effective energy transfer to the receiver medium. This involves consideration of the irradiance, relative humidity, sun’s path across the year, design, sizing and material selection of the reflectors and receiver. This is an iterative process to achieve a higher amount of heat on the receiver.

- Develop strategies to apply the CST system in the following areas: (1) distillation of treated wastewater effluent and rainwater, (2) use in pyrolytic processes for production of biochar. The system should achieve a temperature of at least 250 degrees Celsius on the receiver.

- Fabricate a scalable prototype (1m by 1m) with an emphasis on costs, viability, lifecycle, and efficiency. Heat storage is not required for this prototype.

- Evaluate the performance of the prototype and calculate the feasibility and potential cost savings of the following: (i) water distillation via solar thermal heating vs membrane filtration; (ii) biochar production from pyrolysis of biomass in the absence of oxygen under high temperature. Biochar is a means of carbon sequestration, and its manufacture can potentially generate carbon credits for stakeholders.
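As a first feasibility check for the distillation use case in the scope above, the thermal power collected by a 1 m by 1 m aperture and the resulting distillate rate can be estimated from an energy balance. The sketch below is a rough estimate only: the irradiance, optical efficiency, and inlet water temperature are assumed values, not project data, and receiver heat losses are ignored.

```python
SPECIFIC_HEAT_WATER = 4186.0      # J/(kg K)
LATENT_HEAT_VAPORISATION = 2.257e6  # J/kg at 100 degrees Celsius


def collected_power_w(irradiance_w_m2, aperture_m2, optical_efficiency):
    """Thermal power delivered to the receiver, ignoring receiver heat losses."""
    return irradiance_w_m2 * aperture_m2 * optical_efficiency


def distillation_rate_kg_per_h(power_w, inlet_temp_c=30.0):
    """Mass of water heated from inlet_temp_c to 100 C and evaporated per hour."""
    energy_per_kg = (SPECIFIC_HEAT_WATER * (100.0 - inlet_temp_c)
                     + LATENT_HEAT_VAPORISATION)
    return power_w * 3600.0 / energy_per_kg


# Assumed values for illustration: 800 W/m^2 direct irradiance,
# 1 m x 1 m aperture (from the project scope), 60% optical efficiency.
power = collected_power_w(800.0, 1.0 * 1.0, 0.6)
rate = distillation_rate_kg_per_h(power)
print(f"power = {power:.0f} W, distillate = {rate:.2f} kg/h")
```

Under these assumptions the prototype would deliver roughly 480 W and evaporate well under 1 kg of water per hour, which gives a baseline for the cost comparison against membrane filtration.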

You will be working with a team of UROP students and academic and industry supervisors, who will provide you with support on this project.

Project supervisors: Dr Elliot Law (elliot.law@nus.edu.sg), Dr Jovan Tan (jovantan@nus.edu.sg)

Industry partner/collaborator: Greenairy Pte Ltd

Indoor air pollution is a serious but invisible problem, especially in closed spaces like makerspaces, studios, and offices, where toxic gases from 3D printers, solder, laser machines, solvents, and cleaning agents build up fast.

This project challenges you to optimise a smart air-purifying plant tower that uses real-time sensors + nature-based methods to remove toxic gases better than conventional air purifiers. You can work on both the hardware (airflow optimisation, electronics, sensor integration, modular design) and software (IoT dashboard, sensor data processing, web app gamification, machine learning for purification performance) needed to optimise the system. There is also the possibility of research on microbes, depending on the interest and expertise of the students.

The system is connected to a companion app for students, where they can grow microgreens and learn about sustainability through mini-games and hands-on experiences. If you’re excited about climate tech, health, start-ups, and real-world applications of IoT, you can work on a solution that is being tested in schools and workspaces across Singapore, helping people breathe cleaner air and engaging students in sustainability.

Those who are interested in working on this project should ideally have prior experience with one or more of the following:

- Arduino or ESP32 and sensor interfacing

- Machine learning

- Web-app development (e.g. React, Firebase)

- 3D modelling or prototyping (e.g. SolidWorks, Fusion360)

Project supervisor: Dr Tang Kok Zuea (kz.tang@nus.edu.sg)

Industry partner/collaborator: 800 Super

Many different scheduling tools are available for waste removal in a city environment. Customised and built-in solutions are costly and not easily reconfigurable. In this project, a sustainable solution to optimise a fleet of waste trucks is to be developed.

Innovating with Immersive Reality

Projects in this theme aim to develop novel applications of virtual reality and augmented reality to serve the unmet needs in industry verticals such as healthcare, education and entertainment.

Project supervisor: A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg)

Industry partner/collaborator: NUS Faculty of Dentistry

Effective communication is crucial in dentistry for patient care and treatment success. However, many dentistry students face challenges in developing communication skills due to limited opportunities for practice with real patients. To address this, we propose the development of an AI Simulated Patient system tailored for dentistry student communication training. This project integrates emerging technologies including generative AI (Gen-AI), Large Language Models (LLM), and Virtual Reality (VR) to create realistic simulated patient interactions.

Students in this project may design and develop one or more of the following scopes:

- Utilise Gen-AI to create realistic virtual patient scenarios based on diverse demographic profiles, dental conditions, and communication challenges commonly encountered in clinical practice.

- Implement an LLM to enable natural language processing for realistic and contextually appropriate dialogue between students and virtual patients.

- Integrate VR technology to provide an immersive environment where students can engage in simulated patient consultations.

- User study and analytics/assessment of students’ learning.

The project will be supervised by Associate Professor Khoo Eng Tat and supported by the research fellow and engineers from the Immersive Reality Lab. It will be carried out in collaboration with Associate Professor Wong Mun Loke from the NUS Faculty of Dentistry. Examples of immersive reality (VR/MR) and generative AI (Gen-AI) student projects completed under the Immersive Reality Lab can be found on the website https://cde.nus.edu.sg/edic/projects/innovating-with-immersive-reality/.

Project supervisor: Dr Cai Shaoyu (shaoyucai@nus.edu.sg)

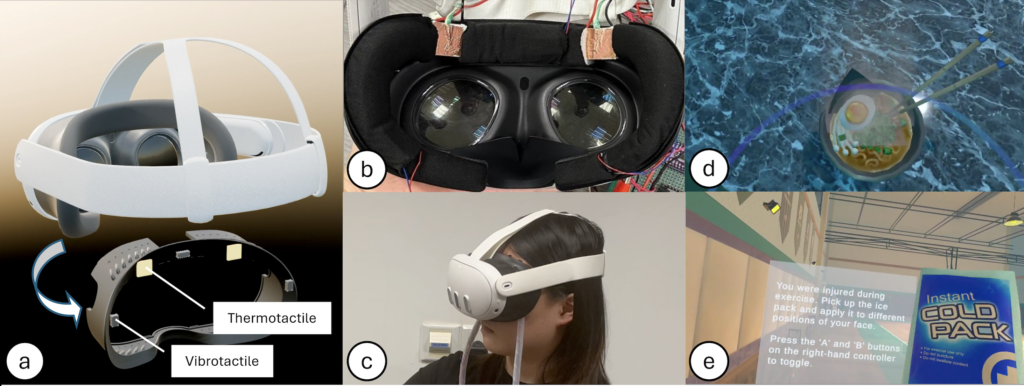

In this project, you will explore sensory augmentation via haptic feedback on the face and nose, focusing on smell augmentation, while investigating the effects of smell modification illusion through temperature feedback in psychological experiments.

Project supervisor: Dr Cai Shaoyu (shaoyucai@nus.edu.sg)

In this project, you will develop a pneumatic-based haptic interface system that generates vibrotactile stimuli within the human-perceivable frequency range to enhance tactile texture perception of physical material surfaces.

Project supervisor: A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg)

This project is dedicated to pushing the boundaries of immersive technologies, generative AI, and large language models (LLMs). Students can explore how these technologies can be integrated to transform VR/AR simulation systems used in sectors like medical education, aviation training, and elderly care. Students will investigate the current limitations of immersive systems, design novel solutions to enhance realism, interactivity, and usability, and pioneer new use cases. A key emphasis will be placed on user experience (UX) design, real-time AI-driven feedback, and human-AI interaction.

Students joining this project will have the flexibility to either select from a curated list of project topics or propose their own ideas that align with the studio’s themes. Under the guidance of A/Prof Khoo Eng Tat and the Immersive Reality Lab team, students will work closely with industry partners to develop real-world solutions that innovate beyond today's capabilities.

Proposed areas of exploration:

- VR/AR applications for medical, nursing, and aviation training

- Simulating realistic AI-driven virtual characters using GenAI and LLMs

- Auto-feedback and skill assessment systems powered by GenAI in healthcare training

- Computer vision and machine learning for object detection, tracking, and 3D reconstruction

- Simulating and sensing emotions in virtual human interactions

Problem statements (students may choose or propose their own):

- Virtual Paediatric (Infant) Patient Simulation — Create an immersive VR experience for training in pediatric emergencies, focusing on respiratory distress scenarios. (Collaboration with Centre for Healthcare Simulation)

- AI Social Companion Robot/Pet for Elderly — Design a virtual or physical AI companion for emotional support and engagement of elderly individuals. (Collaboration with NUS Nursing)

- AI Co-Pilot in VR Simulator for Pilot Competency Training — Integrate an AI-driven virtual co-pilot to enhance decision-making and skill development in pilot training simulators. (Collaboration with Singapore Airlines-NUS Digital Aviation Corp Lab)

- Emerging Applications of GenAI and VR/MR — Students propose a novel idea combining GenAI, VR, and MR technologies to solve a real-world challenge or create a new experience.

Project supervisor: Dr Tang Kok Zuea (kz.tang@nus.edu.sg)

Industry partner/collaborator: Singapore Eye Research Institute, Singapore National Eye Centre

Gaze tracking and speech recognition will be incorporated on a VR platform to capture users’ inputs in a medical setting. Such functions will help doctors diagnose patients’ conditions in medical settings such as emergency medicine and triaging.

Project supervisor: A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg)

Advancements in full-body motion sensing and generative AI are opening new frontiers in the art and science of dance. Traditional dance practice often relies on repetitive rehearsals and instructor feedback, but dancers face challenges in achieving precise technique refinement and exploring creative movement variations.

This project aims to create an innovative system that integrates VR-based immersive full-body tracking with Generative AI-driven choreography. The goal is to empower dancers to train anywhere, receive real-time, AI-guided feedback, and collaborate with AI to generate new, optimized choreography. By blending immersive reality and creative AI generation, the project envisions a future where human dancers and AI co-create, enhance technical mastery, and expand artistic boundaries.

We will collaborate with student dance groups to collect real-world motion data and evaluate the system's impact on choreography development and learning outcomes.

Students participating in this project can choose to contribute to one or more areas, including:

- Full-Body Pose Estimation: Develop real-time motion tracking models using VR devices (e.g., VR controllers, body sensors) to accurately capture dance movements.

- Generative AI for Choreography: Apply GenAI techniques to create, modify, and optimize dance sequences based on sensed body motions, proposing novel movement patterns and variations.

- Immersive VR Environment Design: Build VR spaces where dancers can interact with AI-generated feedback, practice performances, and experiment with choreographic ideas.

- User Research and Evaluation: Design and conduct user studies to assess how AI-assisted choreography impacts movement quality, creativity, technical refinement, and the dancer’s learning experience.

Project supervisor: A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg)

Industry partner/collaborator: NUS Alice Lee Centre for Nursing Studies

A study by Goodman et al. (2023) demonstrated that the accuracy and completeness of generative artificial intelligence (Gen-AI) chatbot responses to diverse medical question types and difficulties were rated high by physicians. Integrating Gen-AI and virtual reality (VR) simulations in nursing education has tremendous potential to enhance the clinical reasoning and interprofessional communication skills of nursing students. These technologies provide immersive, realistic, and scalable training solutions that bridge the gap between classroom learning and real-world clinical practice.

Students in this project will design and develop Gen-AI agents and multi-user VR simulations to improve nursing students’ clinical reasoning and interprofessional communication skills. A user study will be conducted to test the feasibility of the Gen-AI-powered VR simulation. Accuracy and ethical aspects of Gen-AI may also be explored, including strategies for fine-tuning LLMs with nursing domain-specific content.

The project will be supervised by Associate Professor Khoo Eng Tat and supported by the research fellow and engineers from the Immersive Reality Lab. It will be carried out in collaboration with Professor Liaw Sok Ying from the Alice Lee Centre for Nursing Studies (NUS Nursing), NUS Yong Loo Lin School of Medicine. Examples of immersive reality (VR/MR) and generative AI (Gen-AI) student projects completed under the Immersive Reality Lab can be found on the website https://cde.nus.edu.sg/edic/projects/innovating-with-immersive-reality/.

Project supervisor: A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg)

As human-AI interaction becomes more pervasive in industries such as healthcare, customer service, and aviation, the ability for AI agents to simulate and respond to human emotions will be critical for realism, effectiveness, and empathy. This project, Generative AI Human, explores the creation of AI-driven virtual agents capable of both simulating human-like emotional expressions (e.g., anger, frustration, compassion) and detecting the emotional states of users to adapt their responses accordingly.

Students will work on two intertwined challenges:

Simulating emotions in AI agents

Develop intelligent agents that can generate nuanced emotional behaviours, such as anger or impatience, critical for realistic training scenarios (e.g., angry customer simulations for service training, distressed patient simulations for medical training). This includes voice tone modulation, facial expression animation, and contextual behaviour adaptation using generative AI and LLM-based techniques.

Emotional detection and feedback loop

Implement systems capable of detecting user emotions through computer vision (facial recognition), audio analysis (speech tone/sentiment), or physiological data. These insights can be fed back into the agent’s behaviour to create a dynamic two-way emotional interaction.
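The two-way loop described above, in which detected user emotion feeds back into the agent's behaviour, could be prototyped as a smoothing step followed by a policy lookup. The sketch below is illustrative only: the emotion labels, response styles, smoothing factor, and threshold are hypothetical, and the per-frame scores are assumed to come from whichever vision or audio detector the team chooses.

```python
# Hypothetical mapping from a dominant user emotion to an agent response style.
RESPONSE_STYLE = {
    "anger": "de-escalate",
    "sadness": "comfort",
    "neutral": "continue",
}


def smooth_scores(previous, detected, alpha=0.5):
    """Exponentially smooth per-emotion scores to damp frame-to-frame noise."""
    emotions = set(previous) | set(detected)
    return {
        e: alpha * detected.get(e, 0.0) + (1.0 - alpha) * previous.get(e, 0.0)
        for e in emotions
    }


def choose_style(scores, threshold=0.6):
    """Pick a response style once one emotion is confidently dominant."""
    if not scores:
        return RESPONSE_STYLE["neutral"]
    emotion = max(scores, key=scores.get)
    if scores[emotion] < threshold:
        return RESPONSE_STYLE["neutral"]
    return RESPONSE_STYLE.get(emotion, RESPONSE_STYLE["neutral"])


# Two consecutive (assumed) detector readings of an angry user.
state = smooth_scores({}, {"anger": 0.9})
first_style = choose_style(state)
state = smooth_scores(state, {"anger": 0.9})
second_style = choose_style(state)
print(first_style, second_style)
```

Smoothing means a single noisy reading does not flip the agent's behaviour; the style changes only after the emotion persists across readings. In a full system the chosen style would condition the LLM prompt, voice tone, and facial animation.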

Students will have the opportunity to contribute to both technical development (e.g., building emotion models, integrating sensing technology) and user experience design (e.g., ensuring the interaction feels natural and realistic). Applications range from customer service training simulations to healthcare communication skills education.

Possible areas of focus (students may specialize based on interest):

- Emotion simulation using LLMs and multimodal generative AI (text, voice, and facial synthesis)

- Computer vision and audio analysis for real-time emotion detection

- Real-time adaptation of agent behaviour based on detected emotional cues

- User studies to evaluate realism, effectiveness, and user experience of emotional agents

- Ethics and bias mitigation in emotional AI systems

Potential use cases:

- Customer service training: Simulating angry or dissatisfied customers for roleplay-based training.

- Healthcare communication training: Simulating distressed patients or anxious family members for doctors and nurses.

- Aviation crew training: Simulating emotional passenger interactions for cabin crew practice.

- Elderly care support: Creating empathetic AI companions that detect loneliness or sadness.

Project supervisor: A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg)

Industry partner/collaborator: NUS Yong Loo Lin School of Medicine

This project aims to revolutionize obstetrics and gynaecology (O&G) childbirth delivery training using immersive simulation by transforming how medical professionals learn and acquire competency in birth delivery procedures. Our innovative Virtual Reality (VR) and Mixed Reality (MR) Birth Delivery Training platform offers an unparalleled opportunity for medical students to immerse themselves in realistic and interactive simulations of childbirth scenarios.

Students in this project will design and develop new training scenarios for O&G doctors, medical students, midwives and nursing students. Students will gain exposure to immersive reality technology and generative AI, using large language models to create dynamic training environments and procedures and an AI patient that mirror real-life obstetric situations. They will carry out research with medical faculty and students, gain hands-on experience in VR/MR and generative AI (Gen-AI) development, and perform user studies to validate the efficacy of the simulations.

The project will be supervised by Associate Professor Khoo Eng Tat and supported by the research fellow and engineers from the Immersive Reality Lab. It will be carried out in collaboration with Assistant Professor Gosavi Arundhati Tushar from the Department of Obstetrics & Gynaecology, NUS Yong Loo Lin School of Medicine. Examples of immersive reality (VR/MR) and generative AI (Gen-AI) student projects completed under the Immersive Reality Lab can be found on the website https://cde.nus.edu.sg/edic/projects/innovating-with-immersive-reality/.

Project supervisor: Dr Cai Shaoyu (shaoyucai@nus.edu.sg)

Industry partner/collaborator: NUS Libraries

In this project, you will create immersive narratives for Singapore's hawker culture, which was inscribed as the country's first element on the UNESCO Representative List of Intangible Cultural Heritage, using new interactive media (e.g., VR, 360) to transport users to the era of traditional hawker centres, complete with classic hawker foods and stalls.

Project supervisor: Dr Cai Shaoyu (shaoyucai@nus.edu.sg)

In this project, you will design and develop a small and lightweight wearable olfactory display that emits scents by heating solid perfume materials (e.g., wax), using approaches such as Peltier-based or laser-based heating, for precise scent generation of multiple odours.

Project supervisor: Dr Cai Shaoyu (shaoyucai@nus.edu.sg)

In this project, you will design a wearable haptic interface for providing haptic feedback through IoT and smart hardware to build immersive experiences for multi-sensory VR/AR, which incorporates visual, auditory and haptic feedback in specific virtual scenarios.

Innovations in Intelligent Systems

Projects in this theme focus on the design of complex engineering systems and automation for various applications.

Project supervisor: Mr Soh Eng Keng (ek.soh@nus.edu.sg)

Industry partner/collaborator: CEVA Logistics

Background

During packing operations, product volumes are so large that saving time on a single operation can yield a significant efficiency gain across the whole process. Manually pushing carton boxes onto a conveyor belt is time-consuming and can be automated.

Our project aims to introduce a new design that makes our automated kitting process more efficient. The proposed system should automate product placement onto the conveyor belt (which transports the products to a robotic arm performing the kitting).

Project objectives

Create an automated feeder design that will allow operators to load the products by placing a large number of them on a flat surface. The feeder should be able to push the carton boxes in groups (or lines) onto the conveyor belt automatically. The products will then flow to the kitting station equipped with a robotic arm. The objective of this project is to create a working prototype of this design.

Project scope

- Review current similar technologies that are relevant to this specific use case

- Propose and evaluate new design concepts for the automated feeder

- Select the most promising design concept, and prototype and test the proposed solution with real products

- Acquire and analyze data to assess the new design efficiency

Project supervisors: Mr Soh Eng Keng (ek.soh@nus.edu.sg), Mr Keith Tan (keithtcy@nus.edu.sg)

Industry partner/collaborator: Durapower Group

Background

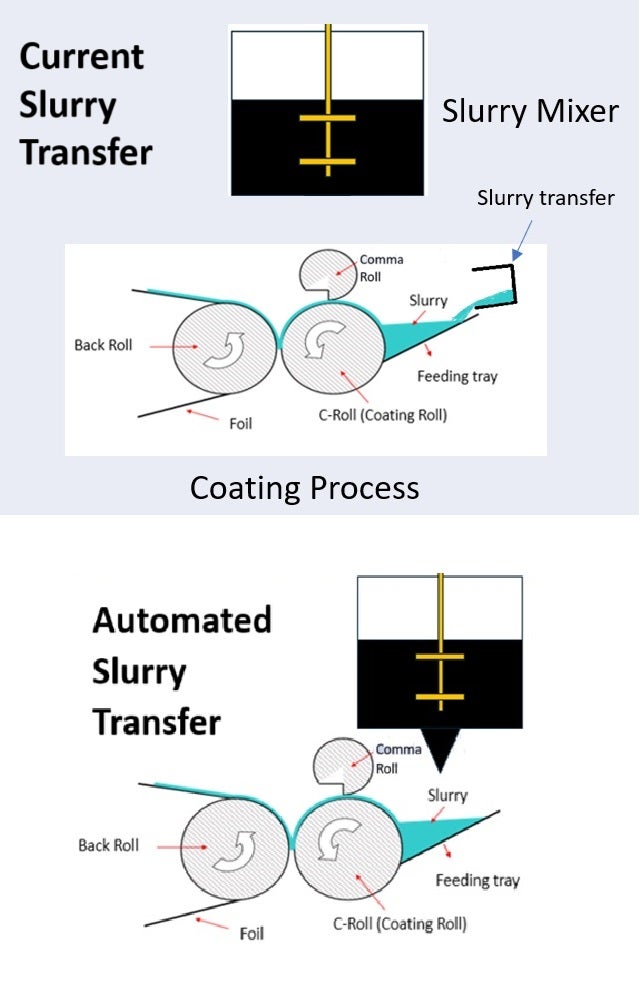

This project is related to the electrode coating process for battery foil at a research lab in Singapore. The first step of the process is mixing the slurry, after which the slurry is transferred out of the mixer pot, sieved, and placed into a bowl in preparation for the second step, coating. During coating, only a small amount of slurry is transferred onto the feeding tray, and the remainder left in the bowl may start to experience changes in its properties (e.g., change in viscosity, settling and agglomeration of particles). These changes may affect the quality and consistency of the coating in large-volume coating runs.

Currently, coating consistency is ensured by stirring the slurry manually throughout the whole coating process, which is tedious and time-consuming. Automation may help to improve efficiency and consistency and reduce the labour cost of the process.

Project objectives

- Understand the electrode fabrication process

- Automate the slurry transfer process from the mixer to the coating machine

The automated slurry mixer must have the following capabilities to ensure consistent coating:

- Dosing capability

- In-situ viscosity monitoring

Project scope

Propose and evaluate new design concepts for a container/attachment with the following functions:

- Automated mechanical stirring of slurry

- Automated dosing of slurry

- In-situ measurement of viscosity

Select the most promising design concept, and prototype and test the proposed solution.

Project supervisors: Mr Eugene Ee (wheee@nus.edu.sg), Mr Soh Eng Keng (ek.soh@nus.edu.sg), Dr Goh Shu Ting (elegst@nus.edu.sg)

The CanSat competition is an annual event in which university teams compete in a space-related design-build-launch challenge. This project aims to bring together passionate students to build a working CanSat that meets the competition requirements.

Detailed competition information can be found at: https://cansatcompetition.com/

Project supervisor: Prof Goh Cher Hiang (gcherhia@nus.edu.sg)

Industry partner/collaborator: dConstruct Robotics

Portable 3D mapping systems are increasingly used for rapid, flexible surveying across diverse environments, from indoor spaces and multi-floor built structures to outdoor plazas and parkland. However, commercially available LiDAR scanners usually rely on single-axis sweeping LiDAR mechanisms with limited vertical coverage, typically around 70°, requiring multiple passes or additional sensors to fill blind spots. This limitation affects mapping efficiency and compromises the completeness of generated 3D maps. To address this, dConstruct Robotics is developing the next generation of portable mapping systems through this project, which aims to design and integrate a compact, lightweight revolving 3D LiDAR module that can directly replace the regular scanner on their existing handheld platform. The objective is to expand vertical scan coverage by adding a secondary axis of revolution while maintaining low system weight and small form factor, and implement real-time motion correction and de-skewing to ensure consistently high-quality, dense, and accurate 3D point cloud maps. Key deliverables include a fully functional rotating LiDAR prototype equipped with processing capability for motion compensation and de-skewing, seamlessly interfacing with dConstruct’s platform for downstream mapping and data deployment.
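To illustrate what motion correction and de-skewing involve, here is a minimal planar sketch. It is not dConstruct's method: it assumes the sensor moves with a constant yaw rate and a constant velocity during one sweep, and that each point carries a time offset from the start of the sweep. The function name `deskew_scan` and its parameters are hypothetical. A real revolving LiDAR would need full 3D poses, including the angle of the secondary axis, typically taken from an IMU or motor encoder.

```python
import math

def deskew_scan(points, yaw_rate, velocity):
    """Re-express each point in the sensor frame at the start of the sweep.

    points   : iterable of (x, y, t), where (x, y) is measured in the sensor
               frame at capture time and t is seconds since the sweep started
    yaw_rate : assumed constant angular velocity of the sensor, in rad/s
    velocity : assumed constant (vx, vy) of the sensor in the start frame, in m/s
    """
    vx, vy = velocity
    corrected = []
    for x, y, t in points:
        theta = yaw_rate * t  # sensor heading at capture time
        c, s = math.cos(theta), math.sin(theta)
        # Rotate into the start-frame orientation, then add the sensor's displacement.
        corrected.append((c * x - s * y + vx * t, s * x + c * y + vy * t))
    return corrected

if __name__ == "__main__":
    # The same wall point seen at two instants while the sensor moves forward at 1 m/s.
    print(deskew_scan([(1.0, 0.0, 0.0), (1.0, 0.0, 0.1)], 0.0, (1.0, 0.0)))
```

Without this correction, points captured late in a sweep are placed as if the sensor had not moved, which smears walls and edges in the resulting point cloud.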

Project supervisors: Mr Nicholas Chew (nickchew@nus.edu.sg), Mr Graham Zhu (graham.zhu@nus.edu.sg)

The Innovation & Design Hub currently has two Sesto autonomous mobile robots (AMRs), one of which is underutilised. A standalone AMR has limited applications, but an industrial arm manufacturer (Mitsubishi Electric) has proposed attaching their cobot arm to the AMR in order to promote their newly developed ROS 2.0 drivers. Integrating the Mitsubishi cobot arm with the AMR will transform it into a mobile manipulator (a configuration often seen in industry) with potential for more applications within a non-manufacturing environment such as the Hub.

Project objective: develop a working mobile manipulator for the Innovation & Design Hub that can pick tools from the tooling rack and transport them to users at the Sandbox side.

Project scope:

- Design and manufacture a stable base with the necessary power electronics for mounting the cobot arm

- Design and prototype a tool changer with an end-effector gripper

- Interface the Sesto AMR with the Mitsubishi cobot arm through the ROS 2.0 framework

- Program the mobile manipulator unit using the ROS 2.0 publisher-subscriber model to accomplish the end objective

- (Optional) Add machine vision capabilities, depending on students’ motivation

Project supervisor: A/Prof Lim Li Hong Idris (lhi.lim@nus.edu.sg)

Industry partner/collaborator: Clemvision

This year-long project seeks to achieve the following objectives:

- Conceptualize and Design: Develop the foundational software architecture of an existing all-terrain mobility robot, including the controller and sensors.

- Prototype and Test Mobility Systems: Build and test prototype systems for a range of potential applications.

- Integrate AI for Autonomy: Develop and deploy AI algorithms for autonomous navigation, obstacle avoidance, and environmental analysis. Key functionalities include real-time terrain mapping, adaptive pathfinding, and decision-making in complex scenarios.

- Ensure Communication and Control: Implement IoT-enabled communication protocols for remote monitoring, real-time feedback, and control via centralized platforms.

- Conduct Comprehensive Testing and Refinement: Perform rigorous testing across diverse terrains to validate performance and reliability. Refine design and algorithms based on data-driven insights gathered during testing.

Project supervisor: Mr Nicholas Chew (nickchew@nus.edu.sg)

Industry partner/collaborator: Epson Robotics

As industries increasingly focus on labelling hazardous chemicals, operator safety has become a top priority. Chemical substances, especially carcinogenic ones, pose significant health risks. Traditionally, operators are required to wear Personal Protective Equipment (PPE) while handling these chemicals. With Epson Robotics, we have an innovative alternative: robotizing the labelling process. This allows operators to stay safely away from the hazardous zone and control the robot from a distance, or even from the comfort of their homes. This project not only enhances operator safety but also promotes the future of remote work in hazardous environments.

Project Objectives:

- Easy Setup & Stable Operation: Streamlining the setup process for both local and international exhibitions, ensuring the system is easy to demonstrate and deploy, even in dynamic environments.

- Robot as Master Control: Removing the Mitsubishi PLC and HMI control, and making the Epson robot the central control unit. The robot will be operated using Epson’s advanced Graphical User Interface (GUI) on a standard monitor, simplifying the user experience.

- Enhanced Printing Workflow: Transitioning from ASCII code stored in the HMI to using an IPC/PC to trigger print jobs to the Epson ColorWorks printer. This shift will ensure a smoother, more stable operation by directly linking the robot and the printer.

- Cycle Time Optimization: While the current system achieves a print rate of 1600 prints per hour, the robot's operation speed is limited by the Epson ColorWorks printer’s printing speed. The project will focus on optimizing the cycle time to maximize throughput.

- Turntable Stabilization & Optimization: The current system uses a turntable for box orientation. We aim to improve the stability and efficiency of this mechanism, enabling smoother and faster operations.
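The cycle time objective above can be made concrete with a short calculation. The sketch below converts the quoted rate of 1600 prints per hour into a per-label time budget and shows how the slower of two stations sets the throughput. The 1.8 s robot cycle is a made-up figure for illustration only, and the function names are hypothetical.

```python
SECONDS_PER_HOUR = 3600

def cycle_time_s(prints_per_hour):
    """Average time available per print, in seconds."""
    return SECONDS_PER_HOUR / prints_per_hour

def bottleneck_throughput(robot_cycle_s, printer_cycle_s):
    """Prints per hour when robot and printer work in parallel on successive
    labels, so that the slower station sets the pace."""
    return SECONDS_PER_HOUR / max(robot_cycle_s, printer_cycle_s)

if __name__ == "__main__":
    printer_cycle = cycle_time_s(1600)  # rate quoted in the project description
    print(f"Time budget per print: {printer_cycle} s")
    # Hypothetical robot cycle of 1.8 s: the printer remains the bottleneck.
    print(f"Throughput: {bottleneck_throughput(1.8, printer_cycle)} prints/hour")
```

Under these assumptions, a faster robot alone does not raise throughput, so any gain has to come from the printing step or from overlapping printing with robot motion.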

Project supervisor: Dr Cai Shaoyu (shaoyucai@nus.edu.sg)

Industry partner/collaborator: Tan Tock Seng Hospital

The proposed project aims to develop an advanced haptic rendering system designed specifically to simulate precise hand movements encountered during surgical procedures for Da Vinci Surgical Training. By generating realistic force feedback, the system enables novices, such as medical trainees and surgical residents, to accurately perceive tactile sensations associated with various surgical interactions.

Project supervisor: Dr Elliot Law (elliot.law@nus.edu.sg)

Industry partner/collaborator: Temasek Laboratories, NUS

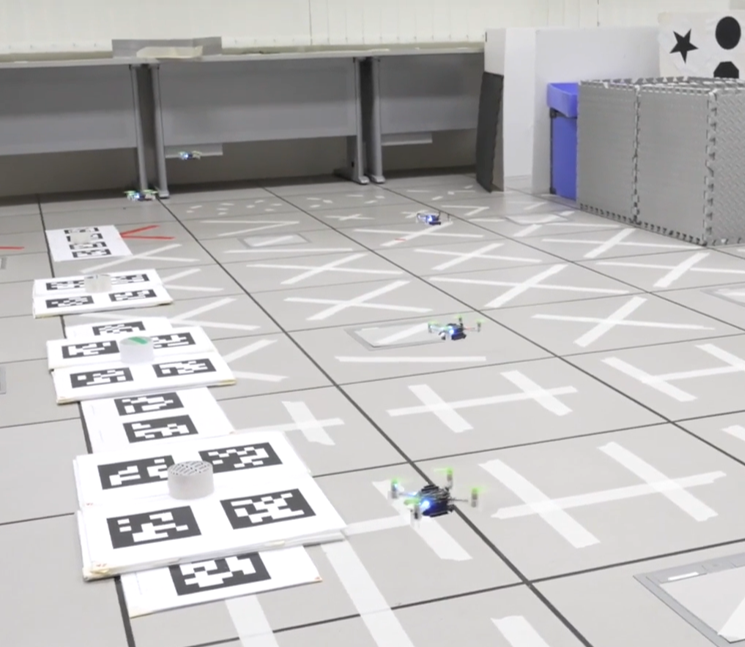

A swarm of drones is particularly useful for disaster response missions. In addition to being able to operate in hazardous environments, drones can also search large areas more quickly and efficiently than a few human rescuers. The goal of this project is to develop and integrate the necessary algorithms to automate a swarm of drones to rapidly and effectively search for and locate victims of a disaster scenario in indoor environments.

The project team will be expected to participate in the 2026 Singapore Amazing Flying Machine Competition (Category E event on Swarm) which will be held sometime in March 2026. Building on the work of previous teams, students in this project will explore state-of-the-art techniques in computer vision, machine learning, and swarm robotics. Students will develop or improve algorithms to optimise the search strategy of the drones and enable them to adapt to the dynamic and unpredictable environment.

The following areas of work will be covered in this project:

- Swarm control and command

- Search strategy

- Localisation

- Collision avoidance and object detection

- Simulation
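As an illustration of what a baseline search strategy might look like, the sketch below splits a rectangular grid of cells into contiguous column strips, one per drone, and sweeps each strip in a back-and-forth (lawnmower) pattern. This is a simple assumed starting point, not the approach used by previous teams or required by the competition, and it ignores obstacles, battery limits, and communication. All function names are hypothetical.

```python
def split_columns(n_cols, n_drones):
    """Split column indices 0..n_cols-1 into contiguous strips, one per drone."""
    base, extra = divmod(n_cols, n_drones)
    strips, start = [], 0
    for i in range(n_drones):
        width = base + (1 if i < extra else 0)  # spread leftover columns over the first drones
        strips.append(range(start, start + width))
        start += width
    return strips

def lawnmower_path(cols, n_rows):
    """Back-and-forth sweep over every cell of one strip, as (row, col) pairs."""
    path = []
    for k, c in enumerate(cols):
        rows = range(n_rows) if k % 2 == 0 else range(n_rows - 1, -1, -1)
        path.extend((r, c) for r in rows)
    return path

def plan_search(n_rows, n_cols, n_drones):
    """One waypoint list per drone; together they cover each cell exactly once."""
    return [lawnmower_path(cols, n_rows) for cols in split_columns(n_cols, n_drones)]

if __name__ == "__main__":
    for drone_id, path in enumerate(plan_search(3, 5, 2)):
        print(drone_id, path)
```

A static partition like this gives full coverage with no overlap, but it cannot react to blocked areas or a failed drone, which is where adaptive strategies come in.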

Project supervisor: Dr Tang Kok Zuea (kz.tang@nus.edu.sg)

Industry partner/collaborator: Singapore General Hospital

With the rapid adoption of automation in industrial sectors including healthcare, warehousing and manufacturing, various solutions now offer lift integration and contactless interfaces for human users and autonomous mobile robots (AMRs). However, the challenge is that many different lift models are installed in buildings, making integration with such solutions difficult. Customised and built-in solutions are costly and not easily reconfigurable. On the other hand, there are also several standards for AMRs to integrate with lifts, and these communication standards have to be customised for the AMRs and lifts involved. To address safety concerns as well as implementation cost and time, we would like to propose an open standard to ‘smart-enable’ lift systems so as to facilitate integration with different lift models and AMRs.

Project supervisor: Dr Aleksandar Kostadinov (aleks_id@nus.edu.sg)

Background

Handling delicate plant structures in automated farming systems remains a major challenge. Traditional grippers often damage plants or fail to adapt to varied morphology. Soft robotics offers a promising alternative, but practical deployment is still limited.

Aims

To design, prototype, and evaluate soft-material-based end effectors capable of gently and adaptively handling plants, flowers, or fruits in an agricultural automation context.

Methodology

Research will begin with a review of existing soft robotic grippers and plant handling challenges. The team will explore materials (e.g. silicone, fabric composites), develop design concepts using CAD, and fabricate prototypes via 3D printing and moulding. Testing will focus on grip adaptability, force distribution, and plant safety.

Deliverables

- Literature review summary

- Concept sketches and CAD models

- Functional prototype(s)

- Evaluation results (e.g. force sensors, plant damage analysis)

- Technical report and showcase poster

Note:

The proposed topic, methods, and deliverables are tentative and intended as a starting point. Depending on the team’s size, interests, and strengths, both the direction of the project (research or development) and its specific outputs (hardware, software, principles) can be adapted accordingly. Students are encouraged to explore related avenues if they find them more compelling.

To support this process, a selection of handbooks and manuals is provided to help kickstart research and frame initial explorations:

- Robotics and automation for improving agriculture | John Billingsley

- Handbook on Soft Robotics | SpringerLink

- Application of Soft Grippers in the Field of Agricultural Harvesting: A Review

- Perceptual Soft End-Effectors for Future Unmanned Agriculture

- Robotic arms in precision agriculture: A comprehensive review of the technologies, applications, challenges, and future prospects - ScienceDirect

Self-proposed projects for CDE4301

If you are interested in proposing your own project, please submit a brief proposal (1-2 pages) describing the motivation, intended objective, scope, and deliverables of your self-proposed project to idp-query@nus.edu.sg. You will also need to identify a suitable supervisor for your project. You may also select this option if you are currently on industrial attachment (IA) and would like to extend your internship project for CDE4301.

CDE4301A Ideas to Start-up

Students who are interested in signing up for CDE4301A can also do so via the online project selection form (the link is provided above), where they should provide their project proposals. They can sign up for CDE4301A as a group or as individuals, and individual students may form a group at the beginning of their CDE4301A project.

For questions about CDE4301A, please contact the course coordinator A/Prof Khoo Eng Tat (etkhoo@nus.edu.sg) or send an email to idp-query@nus.edu.sg.