At the Department of Biomedical Engineering, College of Design and Engineering, National University of Singapore, Assistant Professor Jin Yueming designs algorithms that bring intelligence closer to the way clinicians think and act. Her research is an amalgamation of computer vision and biomedical science, where she links large AI models with the subtleties of medical practice. Across a suite of impactful projects, she explores how AI can interpret, reason, and even anticipate medical decisions, supporting clinicians in making accurate diagnoses and interventions with greater reliability and transparency.

Adapting AI generalists for precise medical perception

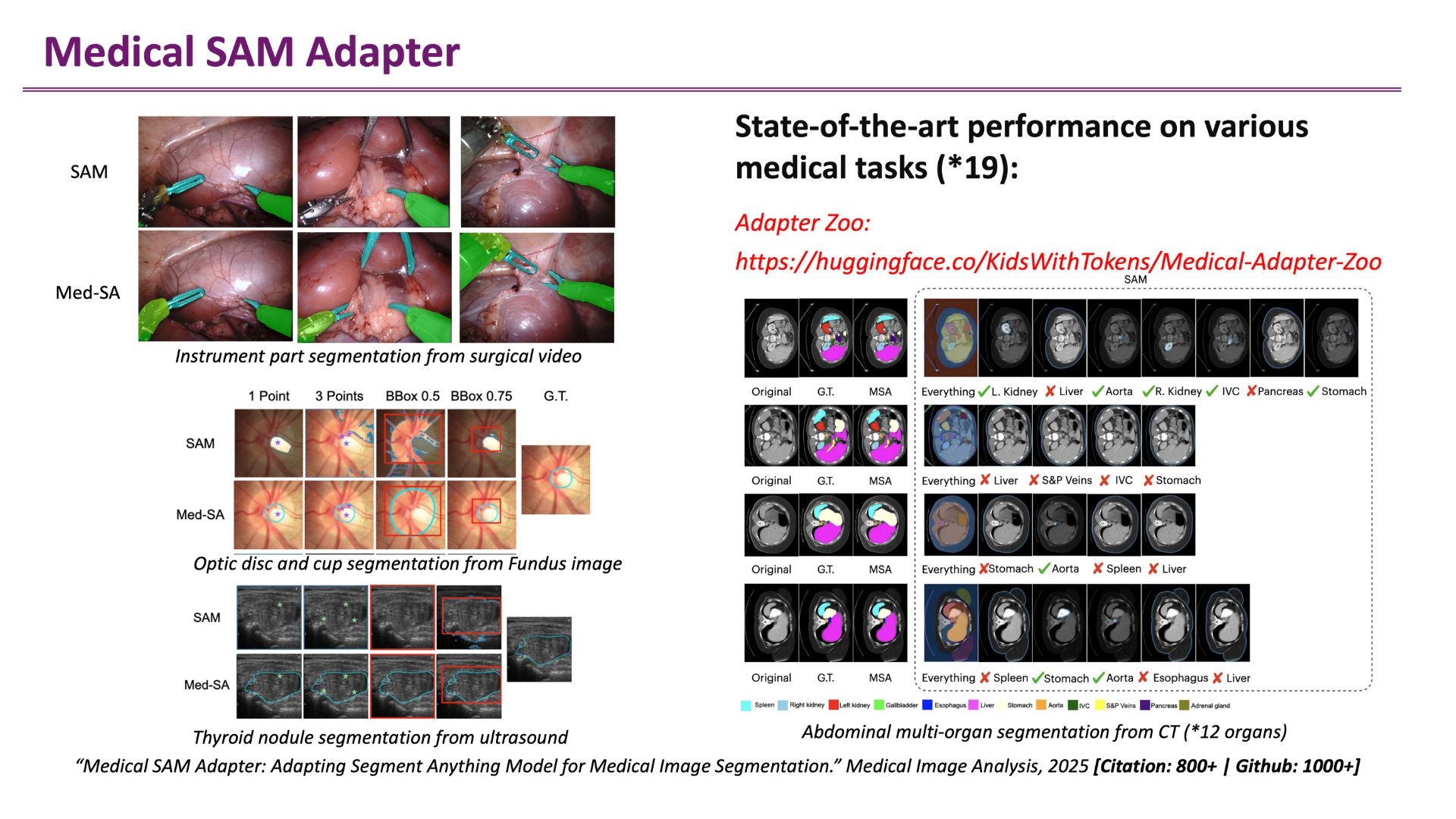

Asst Prof Jin’s recent study (MedIA 2025) begins with a challenge familiar to clinicians who have used AI tools for medical image perception. The models that excel in parsing everyday photographs do not perform as well when it comes to medical scans, where the contrasts are faint and the structures are unpredictable. Retraining a model from scratch for each new dataset is also costly and time-consuming.

Asst Prof Jin’s team took a different approach. Rather than rebuilding a model, they built an ‘adapter’ for an existing one. The method modifies only a small fraction of the parameters within the Segment Anything Model (SAM), a powerful model originally trained on natural images, while guiding it to read computed tomography (CT), magnetic resonance imaging (MRI) and ultrasound scans. This lightweight tuning allows SAM to learn the medical ‘dialect’ of imaging without rewriting its entire vocabulary, resulting in the Medical SAM Adapter, or Med-SA.
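To make the adapter idea concrete, here is a minimal sketch of a generic bottleneck adapter sitting after a frozen layer. It is an illustration only, not the Med-SA implementation: the class names, layer sizes and zero-initialised up-projection are assumptions chosen for this example.

```python
import random


def make_matrix(rows, cols, scale=0.02, seed=0):
    """Build a rows x cols matrix of small random values (illustrative weights)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class FrozenLayer:
    """Stands in for one pretrained layer; its weights are never updated."""

    def __init__(self, dim, seed=0):
        self.weight = make_matrix(dim, dim, seed=seed)

    def forward(self, x):
        return matvec(self.weight, x)

    def num_params(self):
        return len(self.weight) * len(self.weight[0])


class BottleneckAdapter:
    """Small trainable module: project down, apply ReLU, project up, add residual."""

    def __init__(self, dim, bottleneck, seed=1):
        self.down = make_matrix(bottleneck, dim, seed=seed)
        # Zero-initialised up-projection (an assumption of this sketch):
        # before any training, the adapter leaves the frozen output unchanged.
        self.up = [[0.0] * bottleneck for _ in range(dim)]

    def forward(self, h):
        z = [max(0.0, v) for v in matvec(self.down, h)]
        delta = matvec(self.up, z)
        return [a + b for a, b in zip(h, delta)]

    def num_params(self):
        return len(self.down) * len(self.down[0]) + len(self.up) * len(self.up[0])


dim, bottleneck = 64, 4  # arbitrary toy sizes
layer = FrozenLayer(dim)
adapter = BottleneckAdapter(dim, bottleneck)

x = [1.0] * dim
base_out = layer.forward(x)
adapted_out = adapter.forward(base_out)

# Only the adapter's weights would be trained; the layer stays frozen.
trainable_fraction = adapter.num_params() / (adapter.num_params() + layer.num_params())
print(f"trainable share of parameters: {trainable_fraction:.1%}")
```

With these toy sizes the adapter holds 512 of 4,608 parameters. The sizes are arbitrary; the point is only that training touches the small added module while the pretrained weights stay fixed.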

Compact and efficient, Med-SA achieved superior performance across 17 segmentation tasks involving diverse organs and imaging modalities, showing that a large foundation model can be tuned to clinical precision with minimal overhead. “We wanted clinicians to interact with segmentation as naturally as they perceive a medical image,” Asst Prof Jin said. “It should be immediate, intuitive and responsive to their expertise, which is exactly what Med-SA is meant to deliver.” The work has 800+ citations and 1200+ GitHub stars, reflecting its wide adoption by researchers and developers.

“Our simple yet effective adapter technology has been widely recognised as an important AI architecture that facilitates the development of large foundation models for medical image segmentation,” she added.

Unifying and extending perception across diverse domains

If Med-SA makes a specialist out of a generalist, another of Asst Prof Jin’s models, Pro-NeXt (TPAMI 2025), pursues the inverse ambition. Fine-grained visual classification covers tasks that demand an expert ‘eye’: differentiating disease types, grading fabrics in fashion or identifying subtle styles in art. Traditional models trained for one domain are often siloed and rarely transfer well to another. Pro-NeXt introduces a unified architecture that recognises fine visual distinctions across professional domains and performs strongly across 12 datasets spanning five fields, from medicine to design.

The model learns the subtle visual logic shared across the fields, while staying transparent enough for human specialists to follow its reasoning. Its versatility offers an exciting look into a future in which a single AI backbone could support multiple sectors without fragmenting into countless task-specific versions. For hospitals, that means diagnostic tools that adapt as easily as clinicians rotate between cases.

“This study, as a single unified model, dramatically simplifies the way AI can be used across diverse tasks and settings, from glaucoma screening to COVID-19 diagnosis. Our unified model rapidly sharpens diagnosis at an unprecedented level and facilitates real-world deployment of AI in hospitals,” added Asst Prof Jin.

From perception to reasoning: building interpretable agentic intelligence

Supporting clinical diagnosis and treatment requires more than precise perception. AI with reasoning ability can further enhance both accuracy and interpretability, making it more intuitive for clinicians to use. Large language models have begun to show competence here, but they often struggle with complex medical decision-making, which requires context, evidence and discipline.

To address this limitation, Asst Prof Jin developed Agentic Reasoning (ACL 2025), a deliberately restrained agentic AI framework. The system consists of three core agents: web search, code execution and structured memory. Together, they form a minimal yet coherent toolkit for problem-solving: the model can search for information, test ideas through code and map out relationships among facts.
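The division of labour among the three agents can be sketched in a few lines. This is a toy illustration, not the framework's code: `web_search` is a stub over canned text, `run_code` evaluates arithmetic only, `Memory` stores simple triples, and `placeholder_drug` with its dose figure is invented purely for the example.

```python
import ast
import operator

# Invented placeholder content standing in for real search results.
CANNED_PAGES = {"placeholder_drug dose": "placeholder_drug: 2 mg per kg"}

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def web_search(query):
    """Search tool (stub): look the query up in canned pages."""
    return CANNED_PAGES.get(query, "")


def run_code(expression):
    """Code-execution tool (toy): evaluate a plain arithmetic expression."""

    def _eval(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


class Memory:
    """Structured memory: facts kept as (subject, relation, object) triples."""

    def __init__(self):
        self.triples = []

    def add(self, subject, relation, obj):
        self.triples.append((subject, relation, obj))

    def about(self, subject):
        return [t for t in self.triples if t[0] == subject]


def answer(weight_kg):
    """Search for a fact, record it, compute with it, record the result."""
    memory = Memory()
    page = web_search("placeholder_drug dose")
    mg_per_kg = int(page.split(":")[1].split()[0])
    memory.add("placeholder_drug", "mg_per_kg", mg_per_kg)
    total = run_code(f"{mg_per_kg} * {weight_kg}")
    memory.add("patient", "total_mg", total)
    return total, memory


total, memory = answer(70)
print(total, memory.triples)
```

Keeping each tool behind a plain function makes every step of the answer traceable: the retrieved text, the computation and the stored facts can each be inspected on their own.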

On standard reasoning benchmarks and in a clinical case study, the framework outperformed more elaborate systems that utilised increasingly complex tools. When evaluated on a real medical treatment-planning scenario, its outputs were reviewed by practising clinicians, who found them clear and practical. “We focused on how people reason through complex tasks. Therefore, our method can intuitively and effectively automate complex clinical tasks, enhancing the accuracy with greater interpretability,” said Asst Prof Jin. “We did not set our sights on mimicking intelligence, but making AI a disciplined and reliable collaborator.”

From reasoning to anticipation: intelligent navigation and clinical foresight

Beyond understanding and reasoning, predicting future states also benefits clinical decision-making, for example by generating the next surgical actions for navigation or simulating future disease states for planning. To that end, Asst Prof Jin developed an anticipation model, MSTP (NeurIPS 2025), which asks AI to foresee what comes next.

MSTP, short for Multi-scale Temporal Prediction, examines events at multiple temporal scales: phase, step and individual action. It generates these predictions incrementally, assembling them like scenes of a film as the operation unfolds. To maintain coherence in its forecasts, it utilises a network of collaborating AI agents that verify one another’s work, ensuring consistency between near-term and long-term expectations.
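Why consistency across scales matters can be shown with a small example. The sketch below is not the MSTP method: it swaps the collaborating agents for a simple exhaustive search over a hand-written hierarchy, and the phase, step and action labels and all scores are invented for illustration.

```python
# Hypothetical workflow hierarchy: phase -> step -> allowed actions.
HIERARCHY = {
    "preparation": {"position_tools": ["grasp", "move"]},
    "dissection": {
        "expose_tissue": ["retract", "cut"],
        "control_bleeding": ["coagulate"],
    },
}


def is_consistent(phase, step, action):
    """True if the action belongs to the step and the step to the phase."""
    return action in HIERARCHY.get(phase, {}).get(step, [])


def predict_next(scores):
    """Return the best-scoring (phase, step, action) triple among consistent ones.

    `scores` maps each scale name to {label: score}; missing labels score 0.
    """
    best, best_score = None, float("-inf")
    for phase, steps in HIERARCHY.items():
        for step, actions in steps.items():
            for action in actions:
                score = (
                    scores["phase"].get(phase, 0.0)
                    + scores["step"].get(step, 0.0)
                    + scores["action"].get(action, 0.0)
                )
                if score > best_score:
                    best, best_score = (phase, step, action), score
    return best


# Invented per-scale scores from three independent predictors.
scores = {
    "phase": {"dissection": 0.6, "preparation": 0.4},
    "step": {"expose_tissue": 0.7, "position_tools": 0.3},
    "action": {"grasp": 0.5, "cut": 0.4, "retract": 0.1},
}

# Taking the top label at each scale separately can contradict the hierarchy.
independent = tuple(max(scores[s], key=scores[s].get) for s in ("phase", "step", "action"))
joint = predict_next(scores)
print(independent, is_consistent(*independent))
print(joint, is_consistent(*joint))
```

Here the independent picks pair a dissection step with a grasping action that the toy hierarchy does not allow, while the joint search settles on a triple that agrees at every scale.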

The system was tested on recordings of real surgical workflows, producing predictions that aligned more closely with expert assessments than those of conventional methods. “This predictive AI system can support surgical navigation and facilitate surgical robotic automation, therefore, reduce surgeons’ workload, streamline operating room workflows and shorten patient wait times,” added Asst Prof Jin. “Beyond the walls of the operating theatre, the model could be implemented in eldercare, where anticipating human abnormalities is essential for improving living safety.”

In the operating theatre, timing is everything. Surgeons keep their fingers on the pulse of a procedure through images, gestures and glances exchanged across the room. Every scan, incision and robotic assist produces streams of visual data that are dense, complex and relentless. For artificial intelligence (AI) to make itself useful in this dynamic and difficult environment, it must learn to perceive not only what it sees, but why it matters.

Asst Prof Jin has developed a series of pioneering medical AI systems that enable AI to act as a skilled collaborator to clinicians, advancing clinical workflows from enhanced perception to reasoning and anticipation. Her work exemplifies her commitment to creating technologies with substantial practical value and societal impact.