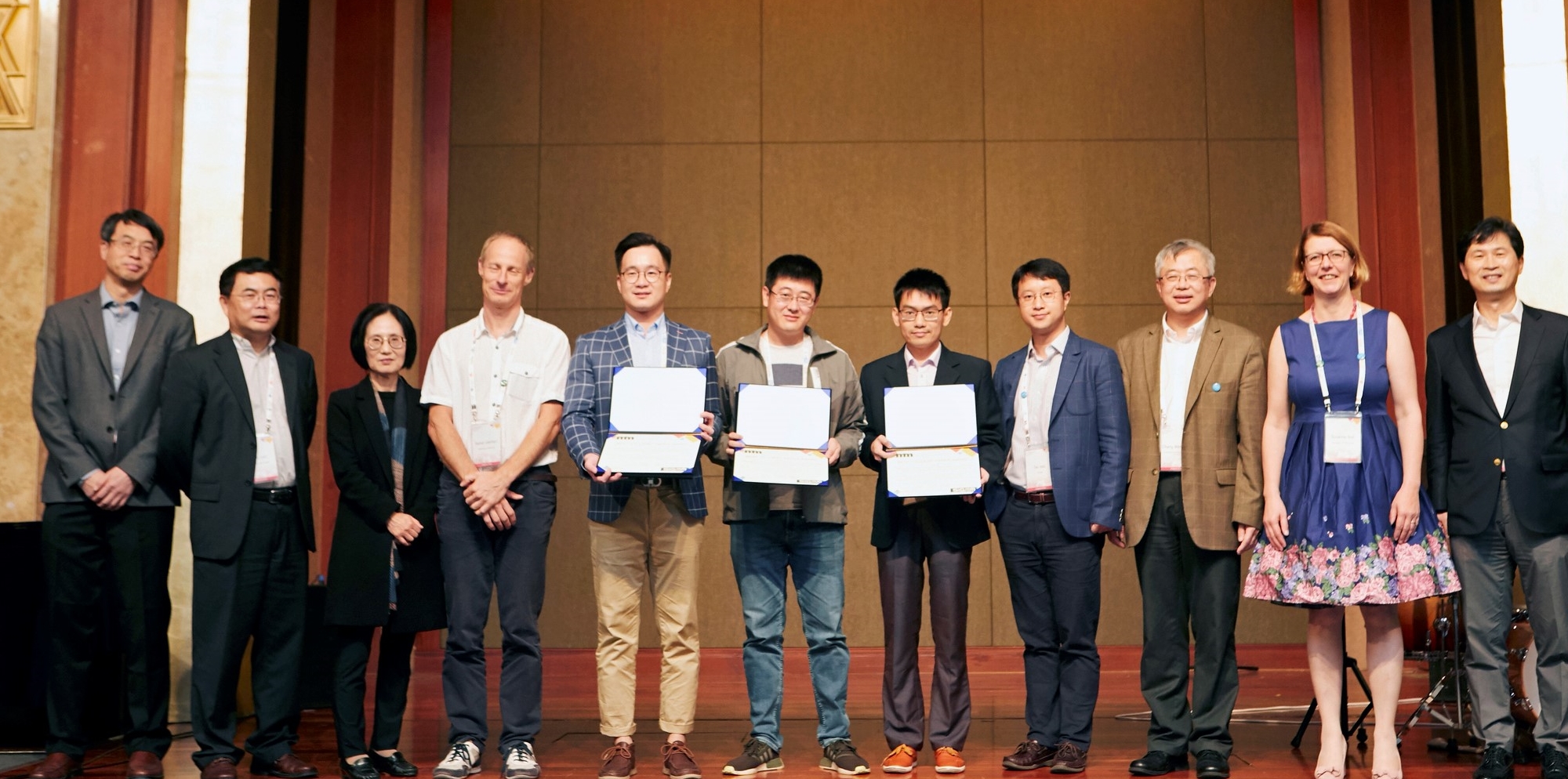

The National University of Singapore (NUS) Learning and Vision (LV) team clinched the Best Student Paper Award at ACM MM 2018. The award ceremony was held in Seoul, Korea on 24 October 2018.

The project was a collaboration between the NUS ECE Department’s LV group (Asst. Prof. Jiashi Feng, Assoc. Prof. Shuicheng Yan, Mr. Jian Zhao, Mr. Jianshu Li and Mr. Yu Cheng) and the NUS SOC Department’s Assoc. Prof. Terence Sim.

ACM Multimedia (ACM MM) is the Association for Computing Machinery (ACM)’s top annual conference on multimedia, sponsored by SIGMM, the ACM Special Interest Group on Multimedia. SIGMM specialises in the field of multimedia computing, from underlying technologies to applications, theory to practice, and servers to networks to devices.

Their paper, “Understanding Humans in Crowded Scenes: Deep Nested Adversarial Learning and A New Benchmark for Multi-Human Parsing”, was one of 209 papers accepted for the conference and one of four papers shortlisted for the prestigious award. They developed a new method for computers to recognise and visually understand people in photos and videos. “While there has been a noticeable progress in the way computers perform perceptual tasks, computers still struggle with visually understanding humans in crowded scenes,” explained Jian Zhao.

To address this problem, the team built a large-scale database of over 25,000 elaborately annotated images of people in groups of varying sizes, poses and interactions, captured in real-life scenarios. The team also developed a new recognition model that can detect and segment body parts and fashion items in a crowded scene while still distinguishing between the identities of the subjects. The team believes that their new model and database will help drive further research and development in areas such as e-commerce, person re-identification, image editing, autonomous driving, and virtual reality.

“Our research has shown that our model outperforms current existing state-of-the-art solutions on our database and several other databases. Furthermore, our work tackles a novel situation with an original method and our dataset can contribute to further research into this topic,” Jian Zhao added.

At the conference, Jian Zhao and Jianshu Li chose to present their research in an unconventional way, using stories and cross-talk. “We prepared for the presentation for a long time and we were quite pleased with the number of good responses we received from the audience after our presentation,” said Jian Zhao. “We were happy that we achieved our goal of making our work memorable and I felt that the time spent preparing it was all worthwhile in the end.”